The hottest trends to come out of Foundry Live 2021

We’ve wrapped our first Foundry Live event of 2021, sponsored by Lenovo and AMD—and it certainly kicked off with a bang!

Foundry Live 2021 covered two product releases (Nuke 13.0 and Modo 15.0), an exclusive look at our look development and lighting roadmap, discussions about the latest innovations and tech trends, and much more.

In case you missed out on all the excitement, we’ve narrowed down the hottest trends and highlights to come out of the event.

So let’s dive in.

Reducing pipeline friction with USD

First into the spotlight were the Katana and Mari teams, who shared their roadmap for look development and lighting. Following some recent internal changes, the two teams are joining forces to remove pipeline friction for artists moving between the two applications.

USD (Universal Scene Description) is central to this effort and is likely to become a vital building block of future pipelines. With this in mind, the teams want artists to be able to use USD so that Mari and Katana work better together.

As part of this effort, Katana recently introduced USD Preview Surface as a new shader type. This lets artists develop a preview material alongside their assets' primary render shader. Building on this, the Mari team is looking to introduce a mechanism for exporting this USD Preview Surface alongside detection maps and a UsdShade look, so the look document can be brought into Katana. The aim is to break down the barriers between the two products.

Pipelines are changing. In traditional VFX and animation pipelines, lighting came at the end of production, but this is beginning to shift, and Katana is evolving to match. Departments want to sign off work with better look development and lighting in place, and supervisors want to see something close to a final-quality shot.

Katana 4.0 marked the start of this journey, adding the ability to export the materials it creates for the viewer and renderers as USD Preview Surface and UsdShade data. This USD integration is key to improving the quality of other departments' deliverables, because it lets look development be sent back upstream. The team hopes to do the same with lighting in the future.
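For readers new to USD, it may help to see roughly what a USD Preview Surface look contains. The sketch below is a minimal illustration, not how Katana or Mari actually export looks: it writes one material with a single `UsdPreviewSurface` shader as a plain-text `.usda` layer. The helper name `preview_surface_usda`, the prim names and the default parameter values are hypothetical, and the layer is assembled by hand so the example runs without Pixar's USD Python bindings (a production tool would more likely author this through the `pxr.UsdShade` API).

```python
def preview_surface_usda(material_name, diffuse=(0.18, 0.18, 0.18),
                         roughness=0.5, metallic=0.0):
    """Return a minimal .usda layer holding one UsdPreviewSurface material.

    Hypothetical helper for illustration only; it does not reflect how
    Katana or Mari write their look documents.
    """
    # Approximate check that the name is usable as a USD prim name.
    if not material_name.isidentifier():
        raise ValueError(f"invalid prim name: {material_name!r}")
    r, g, b = diffuse
    return (
        "#usda 1.0\n\n"
        f'def Material "{material_name}"\n'
        "{\n"
        # The material's surface output is connected to the shader's output.
        f"    token outputs:surface.connect = </{material_name}/PreviewSurface.outputs:surface>\n\n"
        '    def Shader "PreviewSurface"\n'
        "    {\n"
        # info:id selects the UsdPreviewSurface shading node definition.
        '        uniform token info:id = "UsdPreviewSurface"\n'
        f"        color3f inputs:diffuseColor = ({r}, {g}, {b})\n"
        f"        float inputs:roughness = {roughness}\n"
        f"        float inputs:metallic = {metallic}\n"
        "        token outputs:surface\n"
        "    }\n"
        "}\n"
    )


if __name__ == "__main__":
    # Print a red-ish preview material; save it as a .usda file to inspect it.
    print(preview_surface_usda("Lookdev", diffuse=(0.8, 0.2, 0.2), roughness=0.4))
```

Because the preview material is a lightweight, renderer-agnostic description, any USD-aware application can display it, which is what makes it a useful hand-off format between look development and other departments.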

Interoperability between Mari and Katana isn't the only exciting possibility seen at Foundry Live. The Katana and Nuke teams both teased interop between their products, with the ability to composite live with Nuke inside Katana, which the Nuke team expanded on in their talk, covered below.

Getting artists closer to the final image

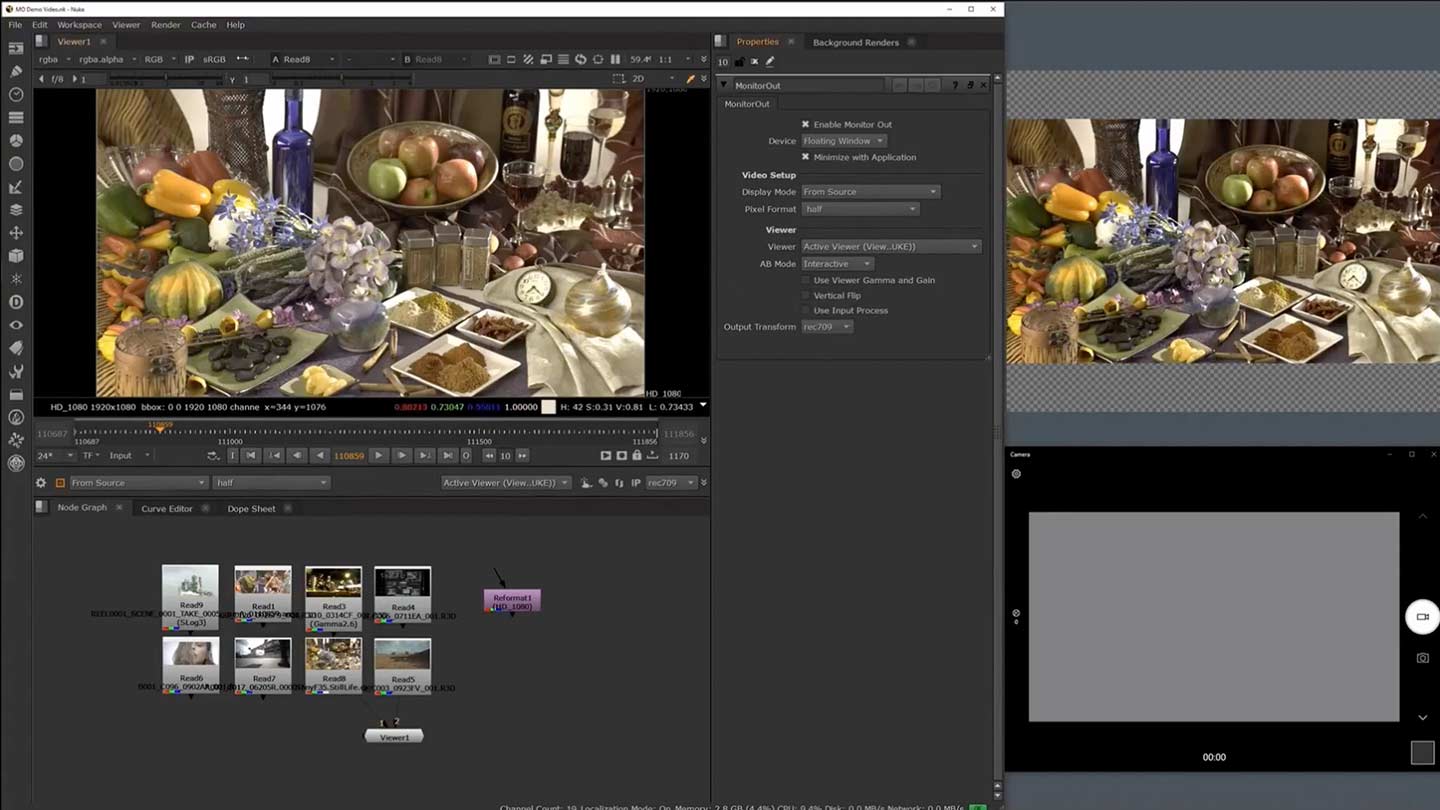

The recent Nuke 13.0 release took center stage at the Nuke team's event, which showed how it empowers artists to create amazing work. Among the latest features are Extended Monitor Out, an updated Hydra viewport using hdStorm, USD support for Camera, Light and Axis nodes, and Sync Review, which is no longer in beta, so artists can work remotely with ease.

The A.I.R. plug-ins were also a hot topic, bringing a new machine learning toolset into Nuke. This includes CopyCat and Inference, which allow artists to train neural networks and apply them to their footage, as well as the pre-trained Upscale and Deblur tools.

Nuke 13.0 wasn’t the only topic of conversation for the Nuke team. They also looked to the future and what’s to come for the industry-standard compositing toolset.

With lighting and compositing being sister disciplines, artists often find themselves using Katana and Nuke side by side. That's why Katana-Nuke interop, mentioned above, is a big part of Nuke's future plans.

The Katana team has developed a very early proof of concept of live Nuke compositing inside Katana using the Foresight rendering system, which you can see in both the Nuke and the Look Development and Lighting sessions. This will allow lighters to work directly in the context of their Nuke comp within Katana, and starts to remove the friction between the two tools.

The team also hinted at other exciting features coming to Nuke, such as GENIO (Game Engine Interop), the integration of OCIO v2, and Node Graph updates like shake to disconnect.

Looking to an innovative future

In our Key Forces and Technologies Driving Change in the VFX Industry webinar, Dan Ring and Mathieu Mazerolle from Foundry's Research team looked ahead at the technology set to change how we work for good.

They discussed the new trends currently disrupting the way we work, chief among them the shift in attitudes towards remote work since COVID-19. We're starting to see the advantages of working from home, and since we will more than likely keep working this way, creative workflows need to adapt quickly.

The same is true of virtual production. As it becomes increasingly clear that it is here to stay, this new way of working is another trend set to disrupt the entire production process. Cloud, a more nuanced trend that is also set to change the game, is seeing gradually increasing use, even though the industry has yet to realize the technology's full potential and its benefits are still emerging.

With these trends kicking up a storm, it's clear the industry needs to evolve quickly to keep up with the huge demand for content. So how exactly are we going to meet the challenges these trends raise?

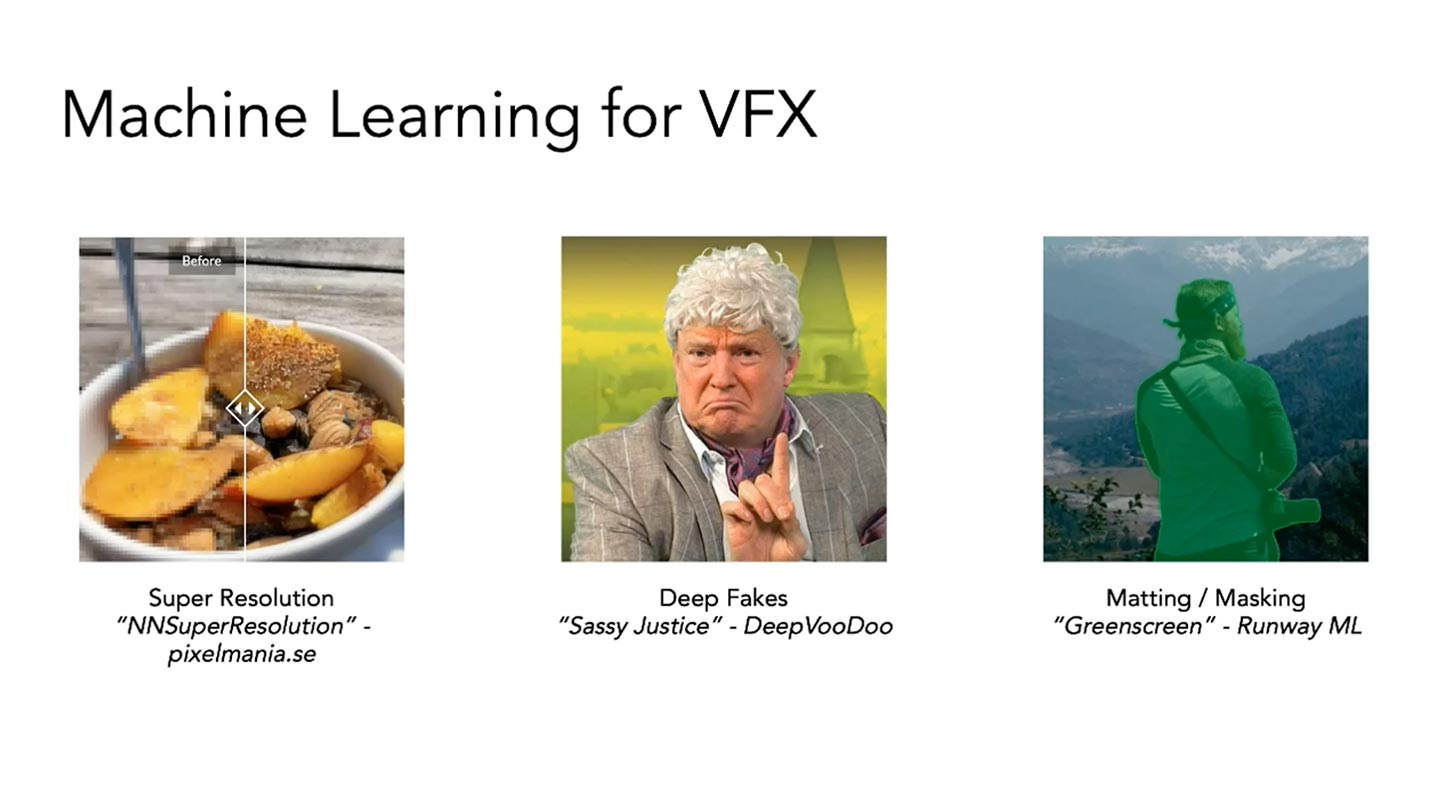

As Dan and Mat see it, there are three big solutions: machine learning, real-time workflows and distributed data, each of which has a role to play in building the efficient workflows of the future.

Machine learning is already a hot topic in the industry and aims to empower artists by giving them more control over their creative work. Meanwhile, real-time workflows blur the lines between traditional production stages and let artists work in the context of the final image.

Supporting both is distributed data, which changes how data moves through a pipeline. This ensures artists can process, at scale, the large volumes of data that real-time and machine learning workflows often generate.

It’s clear that these three tech trends are at the forefront of future workflows, which is why the Research and Product teams at Foundry have started to make serious moves into these areas—just take Nuke’s new machine learning toolset as an example. To find out more about what Foundry has planned, head to the Nuke and Look Development & Lighting talks for an exclusive look.

Enhanced design workflows

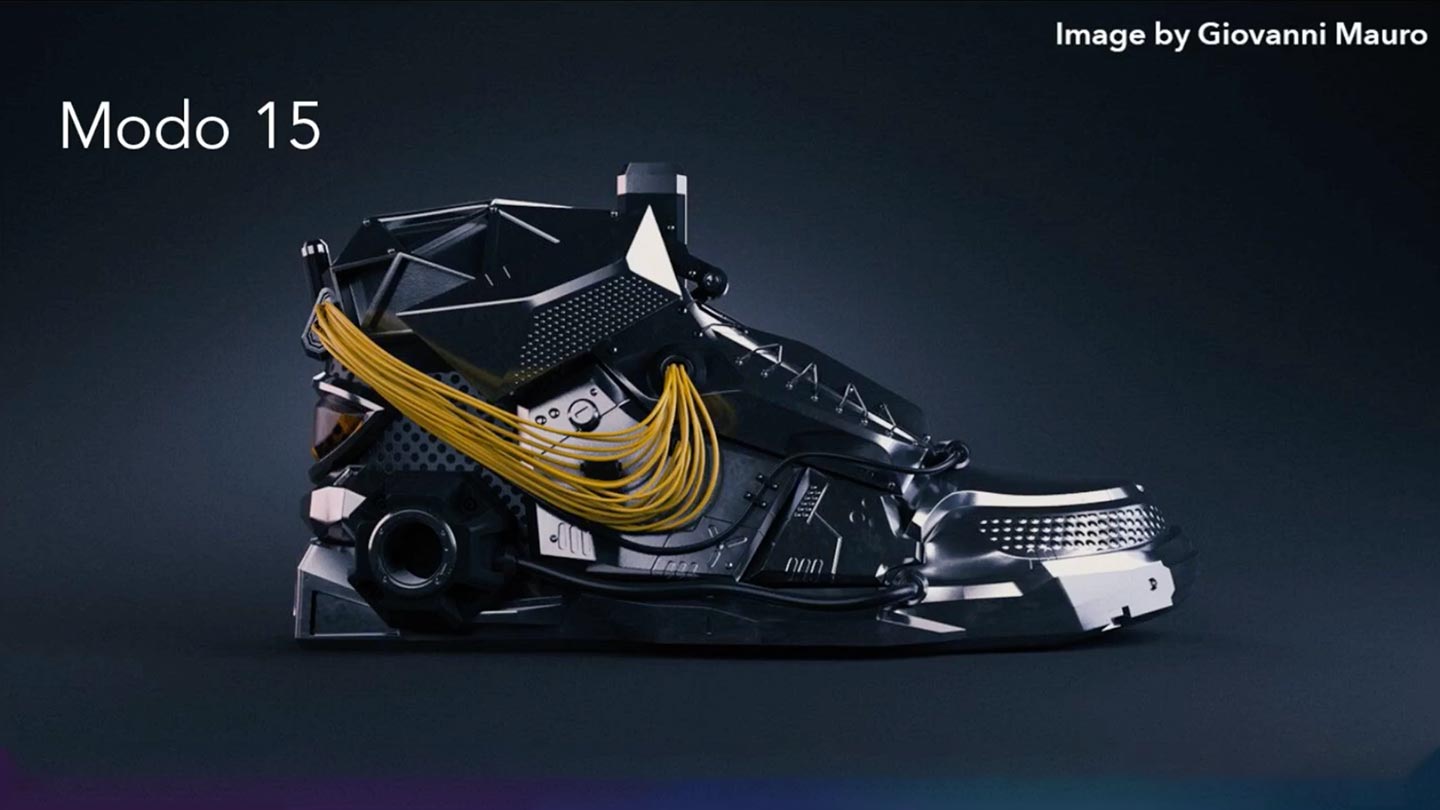

In our penultimate event, we took a deep dive into Modo 15.0, the first in the next series of releases. The Modo team was joined by a panel of special speakers as they uncovered their favorite features of the release and showcased them through demos.

One of the main focuses of this event was the updates to rendering in Modo. Quick Cam is one of the exciting new features in this area, allowing interactive camera navigation during an mPath render. mPath is Modo's new path tracer, designed to enable more interactive workflows for artists.

And this is just the start; the team has a lot more planned in the pipeline to improve the Modo rendering experience.

There was also a focus on animation, a hot topic in the industry right now. Modo 15.0 brings updates to Rig Clay, which was introduced in Modo 14.0: the 2D model view now supports command regions, allowing more flexibility in the types of rigs you can create for your animation projects. There are also updates to Modo's animation editor and some much-welcome UI enhancements, including a new layout for the lower collapsible graph editor viewport.

The Modo team's passion for their product was clear, so much so that the Foundry Live event covered only half of the discussion. You can watch the full talk on Modo Geeks for the detailed scoop on all the must-have features and demos from the brilliant panel.

The importance of education

Wrapping up Foundry Live for this year was our Education Summit: Solving Tomorrow’s Challenges, Today. This took a look at the innovative work happening at Foundry through the eyes of educators, and how the work happening in machine learning and real-time could impact them.

It also looked at the changes we've made to our educational licenses to support everyone affected by COVID-19. Previously, we sold licenses to schools for lab work, with VPN access for students. Now, to accommodate the move to working from home, we offer educational license keys.

Each key is valid for the same timeframe as your school's institutional license and allows students to easily install Nuke, Katana, Mari and Modo on their own computers without going through long verification processes. Students at schools that don't teach Foundry products can also apply for an individual license, and these will be approved on a case-by-case basis. We want to ensure that all educational institutions and students are supported as much as possible.

The Education Summit also shone a light on color management and our upcoming training in partnership with Netflix and Victor Perez. This training offers an in-depth look at color management and ACES color workflows in Nuke. The first training installment dropped on 31st March 2021, alongside a webinar with Victor Perez and Carol Payne, Imaging Specialist at Netflix.

If you missed any of Foundry Live, you can watch the sessions on demand today and catch up on all the exciting insights from our fantastic speakers.

We still have lots of exciting events coming soon, including Foundry Skill Up: An Artist's Guide to Blinkscript, so be sure to keep an eye out and sign up for future events.