This is why eye-tracking in VR is about more than foveated rendering

Of all the potential developments touted as a turning point for VR, few have been as eagerly anticipated as eye-tracking.

Eye-tracking is the ability to measure what a person is looking at inside a VR headset. As we’ve previously explored, it’s generally spoken about in the context of foveated rendering, which can make VR much less computationally demanding, and therefore more cost-effective (Foundry’s own Modo VR already uses foveated rendering on the current crop of HMDs to save on processing power, for example).

Look beyond the big headlines around foveated rendering, however, and you’ll discover a host of other exciting applications for eye-tracking.

Varifocal displays

Even though the optical systems used in today’s VR headsets are improving all the time, they’re still just a very rough approximation of the human vision system.

Because of this, there’s a raft of things we take for granted about our vision that have yet to be accurately replicated in VR technology. The sharp central detail that foveated rendering mimics is one of those things; dynamic focus is another.

Being able to adjust our focus on objects that are either near or far away is an intrinsic part of human sight - one that VR headsets are currently unable to match.

That’s because the lenses in a VR headset always focus at a fixed distance, even when the stereoscopic depth suggests otherwise. This leads to an issue called vergence-accommodation conflict.

The way around this problem lies in the development of varifocal displays which can dynamically alter their focal depth.

In their simplest form, these involve an optical system where the display is physically moved backwards and forwards from the lens in order to change focal depth on the fly.

You need eye-tracking to achieve this because the system needs to know exactly where the user is looking to get the correct focus.

A line is traced from each of the user’s eyes into the virtual scene towards the point they’re looking at. Where those lines meet is where the focal plane should be. This information is relayed to the display, which adjusts its focal depth to match the virtual distance from the user’s eyes to the object.
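The ray-tracing step described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular headset does it: the function names, the coordinate convention (metres, headset space), and the choice to take the midpoint of the closest approach between the two gaze rays (since noisy rays rarely meet exactly) are all assumptions made for the example.

```python
import math

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _along(origin, direction, t):
    """Point reached by travelling t units of `direction` from `origin`."""
    return tuple(o + t * d for o, d in zip(origin, direction))

def vergence_point(left_eye, left_dir, right_eye, right_dir, eps=1e-9):
    """Midpoint of the shortest segment between the two gaze rays.

    Returns None when the rays are (nearly) parallel or would only
    meet behind the eyes. All names here are hypothetical.
    """
    w0 = tuple(l - r for l, r in zip(left_eye, right_eye))
    a = _dot(left_dir, left_dir)
    b = _dot(left_dir, right_dir)
    c = _dot(right_dir, right_dir)
    d = _dot(left_dir, w0)
    e = _dot(right_dir, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        return None  # parallel gaze: effectively looking at infinity
    t_left = (b * e - c * d) / denom
    t_right = (a * e - b * d) / denom
    if t_left <= 0 or t_right <= 0:
        return None  # rays diverge in front of the user
    p_left = _along(left_eye, left_dir, t_left)
    p_right = _along(right_eye, right_dir, t_right)
    return tuple((p + q) / 2 for p, q in zip(p_left, p_right))

def focal_distance(left_eye, left_dir, right_eye, right_dir):
    """Distance in metres from the midpoint between the eyes to the vergence point."""
    point = vergence_point(left_eye, left_dir, right_eye, right_dir)
    if point is None:
        return None
    eye_mid = tuple((l + r) / 2 for l, r in zip(left_eye, right_eye))
    return math.dist(eye_mid, point)

# Eyes 64 mm apart, both looking at an object one metre straight ahead.
left_eye, right_eye = (-0.032, 0.0, 0.0), (0.032, 0.0, 0.0)
target = (0.0, 0.0, 1.0)
left_dir = tuple(t - e for t, e in zip(target, left_eye))
right_dir = tuple(t - e for t, e in zip(target, right_eye))
print(focal_distance(left_eye, left_dir, right_eye, right_dir))  # roughly 1 metre
```

A varifocal system would feed a distance like this to whatever mechanism sets the focal depth. Returning None for parallel rays leaves it to the caller to decide how to treat a gaze at the far distance.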

Done well, varifocal displays could both cancel out vergence-accommodation conflict and enable users to focus on virtual objects much closer to them than is possible in existing headsets.

And even if fully functioning varifocal displays are some way off, eye-tracking could be used in the meantime to simulate depth of field more accurately, blurring objects that lie outside the focal plane of the user’s eyes much as human vision does.

Foveated displays

Foveated displays offer a different solution to the same challenge that foveated rendering aims to address: replicating the way our eyes show the things we’re focusing on with more clarity than things in our peripheral vision.

Where foveated rendering tries to achieve this by concentrating more rendering power on the part of our vision where we can see sharply (and less on our low-detail peripheral vision), you can achieve a similar effect by upping the pixel count.

This concept involves a smaller, more pixel-dense display, which is physically moved to wherever the user is looking (via eye-tracking) to create a sharper picture in that area.

Using a small, dynamic display like this would enable much higher resolution without resorting to the clumsy method of cramming more pixels across the whole field of view.

Even better, this approach could lead to wider fields of view than could otherwise be achieved with a single flat display.

Foveated displays are already being worked on by innovative companies like Varjo. Varjo have superimposed a pixel-dense micro-display over the top of a much less pixel-dense display with a wide field of view. Combining the two gives the user an area of very high resolution for their foveal vision, and a wide field of view for their peripheral vision.

Right now, the smaller display just hangs out at the center of the lens, but the company is looking into different ways to manoeuvre the display so the high-resolution area always tracks to where you’re looking.

Biometric identifiers and adjustments

Most people are familiar with the concept of using body parts to confirm identity (you might be reading this on a smartphone you unlocked by scanning your fingerprint or iris, for example).

It’s also possible to confirm identity using biological characteristics based on human behavior, such as a person’s gait or the way their eyes move when observing something.

Incorporating this kind of eye-tracking technology into VR could provide a way to distinguish between different people using the same headset.

VR systems could automatically identify individual users and bring up their customized environment, including things like game progress and settings. Pass the headset to the next person, and their preferences and saved data would be loaded.

Similarly, eye-tracking can be used to measure interpupillary distance (IPD): the distance between the centers of a person’s pupils. That’s important in VR, because you need to get it right in order to move the headset lenses and displays into the correct position, both for maximum comfort and visual quality.

Most people don’t know what their IPD is, but using eye-tracking, a VR system could instantly measure a user’s IPD and then present instructions on-screen to help them adjust the headset to the optimal setting.
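As a rough sketch of that measure-then-instruct flow, the snippet below computes an IPD from two pupil positions and turns it into an on-screen hint. The function names, the assumption that the tracker reports pupil centers in metres, and the 0.5 mm tolerance are all hypothetical choices made for illustration, not details of any real headset.

```python
import math

def measure_ipd_mm(left_pupil_m, right_pupil_m):
    """IPD in millimetres from two pupil-center positions given in metres."""
    return math.dist(left_pupil_m, right_pupil_m) * 1000.0

def ipd_adjustment_hint(measured_ipd_mm, lens_separation_mm, tolerance_mm=0.5):
    """Build an on-screen instruction comparing the IPD to the current lens spacing."""
    delta = measured_ipd_mm - lens_separation_mm
    if abs(delta) <= tolerance_mm:
        return "Lens spacing matches your IPD."
    action = "Widen" if delta > 0 else "Narrow"
    return f"{action} the lens spacing by {abs(delta):.1f} mm."

# Hypothetical pupil positions reported by the eye tracker (headset space, metres).
ipd = measure_ipd_mm((-0.0315, 0.0, 0.0), (0.0315, 0.0, 0.0))
print(ipd_adjustment_hint(ipd, lens_separation_mm=60.0))
```

A headset with motorized lenses could skip the hint and apply the difference directly.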

Eventually, headsets could be so advanced that this process would happen automatically, with the IPD measured and adjusted without the user having to do a thing.

Virtually grabbing and inputting

In addition to existing methods such as Plane Selection, eye-tracking could also be used as a means to ‘grab’ virtual objects and perform other tasks faster and more efficiently.

Jedi-style ‘force pulling’ (gesturing at an object and having it come to you) has been a feature of VR applications for some time, but eye-tracking could make it far more accurate.

Our eyes are actually a lot better at pointing at objects in the distance than something like a laser pointer would be, because the natural shakiness of our hands is magnified over long distances.
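To get a feel for how a small wobble grows with distance, here is a quick piece of arithmetic in Python. The one-degree wobble is purely an illustrative figure, not a measured value for hands or controllers, and the function name is made up for the example.

```python
import math

def lateral_offset_m(distance_m, wobble_deg):
    """How far a pointer lands off-target at a given distance for a given angular wobble."""
    return distance_m * math.tan(math.radians(wobble_deg))

# Assumed wobble of 1 degree (illustrative only).
for distance in (1.0, 5.0, 10.0):
    offset_cm = lateral_offset_m(distance, 1.0) * 100.0
    print(f"{distance:>4.0f} m away: about {offset_cm:.1f} cm off target")
```

The miss distance scales linearly with range, so a wobble that is barely noticeable at arm’s length can be wider than a small object ten metres away.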

Similarly, pressing buttons, selecting options, and inputting data in VR is likely to be a lot faster and more effective using eye-tracking than it would be using your hands. For this reason, eye-tracking is likely to play a pivotal role if VR is to become a genuinely productive computing platform.

Research and healthcare

VR has already been making headway in healthcare, with companies like SyncThink harnessing the eye-tracking capabilities of headsets to detect concussions.

Early testing indicates that this method makes on-field diagnosis more efficient.

Researchers have used eye-tracking in VR headsets to collect data in a range of different scenarios. Interestingly, even something as simple as catching a ball reveals some surprising results about how our brain uses eyesight to coordinate our movements.

When you look at something more complicated - like the role gaze plays for a pianist playing complex sequences of notes - the picture is even more intriguing.

You might expect a pianist would constantly be tracking their fingers to hit notes accurately, but instead eye-tracking in VR has revealed the player’s eyes are darting around, often looking at the next note to be played in the sequence rather than the current one - or returning to the point between their hands to gather data from their peripheral vision.

This is important, because the more we know about human perception, the better our ability to create immersive virtual and augmented worlds will become.

More lifelike social avatars

You’re probably familiar with the often-quoted statistic that body language accounts for over half of communication (compared to just 7% for the words we speak).

Many of the avatars you see in today’s social VR applications are designed to look like they’re interacting more realistically, with lifelike eye movements such as blinking, saccades, and object focus.

All of these are simulated using animations and pre-programmed logic, however. While this creates the illusion of real responses to make the avatar seem less robotic, it’s just that - an illusion, without any real non-verbal information conveyed.

Eye-tracking has the potential to significantly change this. By monitoring when a user blinks or where they’re looking, accurate data can be transferred to VR avatars to better represent the user’s non-verbal cues.

These could include both conscious and unconscious cues, including winking, squinting, and pupil dilation. Eye-tracking could even help to infer specific emotions like surprise or sadness, which in turn could be mirrored on the avatar’s face.

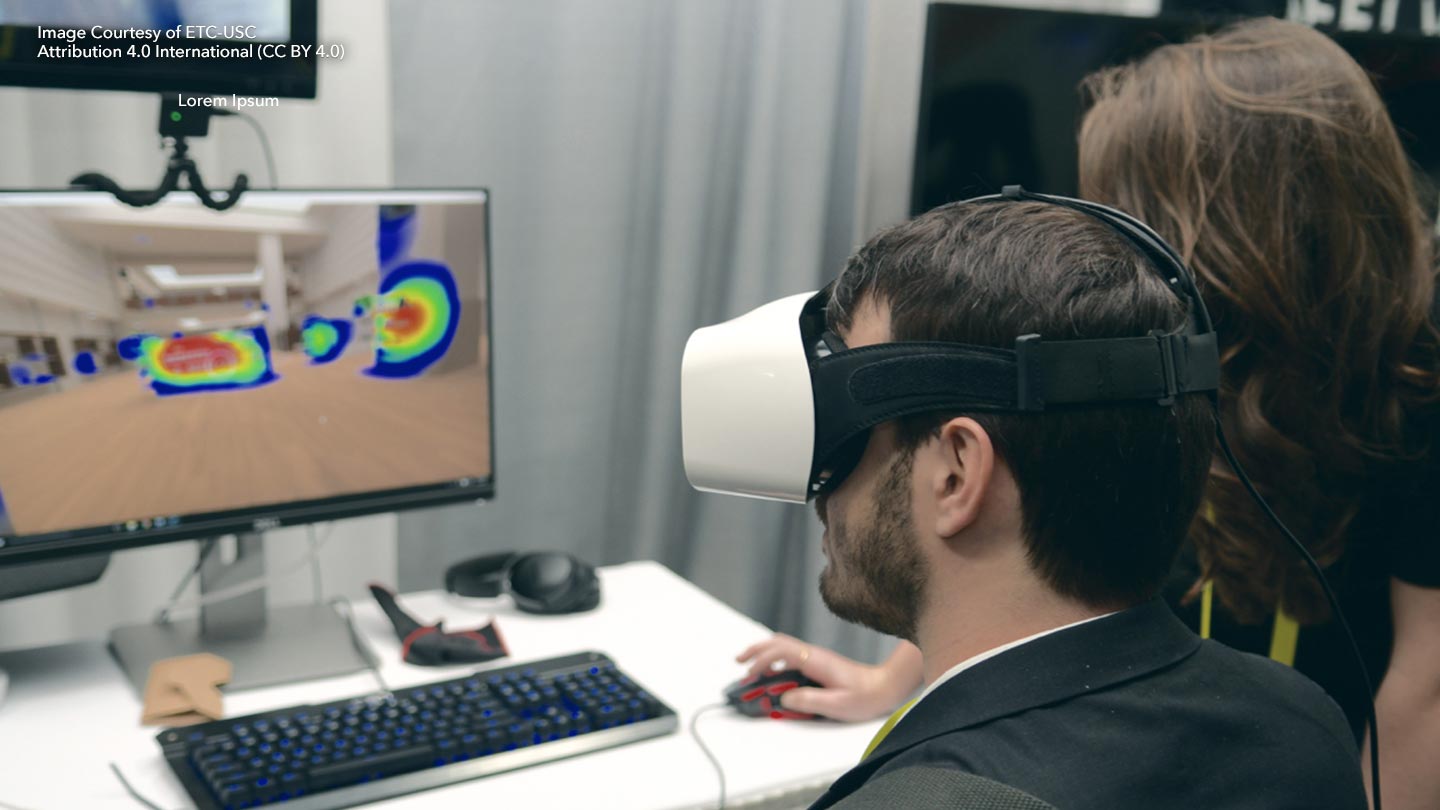

Analytics and user intent

Being able to accurately understand where a user is looking has far-reaching implications for virtual reality storytelling.

Imagine you’re a developer making a VR experience about a soldier going from room to room in a house, checking for booby traps.

Previously, you might have put a lot of time and effort into scripting a sequence where a hidden enemy bursts from a closet - only for the user to miss it because they weren’t looking in the right place.

By tracking the user’s eyes, the event can be triggered at precisely the right moment for the best effect - absolutely crucial for a sense of immersion, cohesiveness and satisfaction. (How jarring would it feel to walk through a VR experience and just miss all the best bits?)
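One simple way to gate a scripted event on gaze is to check whether the target sits inside a narrow cone around the direction the user is looking. The sketch below assumes the eye tracker supplies an eye position and a gaze direction in the same coordinate space as the scene; the function names and the 10 degree threshold are hypothetical values chosen for the example.

```python
import math

def gaze_angle_deg(eye_pos, gaze_dir, target_pos):
    """Angle in degrees between the gaze direction and the direction to the target."""
    to_target = tuple(t - e for t, e in zip(target_pos, eye_pos))
    norm = math.hypot(*gaze_dir) * math.hypot(*to_target)
    if norm == 0:
        return None  # no usable gaze vector, or the target is at the eye itself
    cos_angle = sum(g * t for g, t in zip(gaze_dir, to_target)) / norm
    # Clamp to guard against rounding pushing the value just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))

def should_trigger(eye_pos, gaze_dir, target_pos, max_angle_deg=10.0):
    """True when the target lies within the gaze cone, so the event can fire."""
    angle = gaze_angle_deg(eye_pos, gaze_dir, target_pos)
    return angle is not None and angle <= max_angle_deg

# The user stands at head height looking straight ahead; the closet is 3 m in front.
eye = (0.0, 1.7, 0.0)
looking_forward = (0.0, 0.0, 1.0)
closet = (0.3, 1.7, 3.0)
if should_trigger(eye, looking_forward, closet):
    print("Closet is in view: cue the ambush")
```

In practice a developer would probably also require the gaze to rest on the target for a short time before firing, so that a passing glance doesn’t set the event off early.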

Brands are already paying attention to the potential VR eye-tracking could hold for them.

Kellogg’s recently used virtual reality and eye-tracking technologies to ascertain how best to position its products in supermarkets. Consumers were immersed in a full-scale simulated store, tasked with picking up products and putting them in shopping carts.

After adapting its product placement based on the findings, the company saw sales rise by almost a fifth. Impressive results like this are bound to see other brands follow suit, which could revolutionise the way in-store product placement is designed.

Eye-tracking will be a VR game changer

The examples we’ve explored here are just some of the potential ways eye-tracking technology could be put to use.

Because of the possibilities it holds, it continues to be a key focus of research.

And as for the future, we’ll probably see eye-tracking integrated into more expensive, higher-end VR headsets first, before eventually becoming a regular feature of all VR hardware.