Jakub Knapik, Lighting and FX Art Director on Cyberpunk 2077, talks us through his creative process in this exclusive Q&A

Known for their open-world role-playing games, Polish studio CD PROJEKT RED (CDPR) are connoisseurs of creating visually breathtaking worlds capable of transporting players to entirely new realities. Their latest release, Cyberpunk 2077, offers up Night City as a new haunt to be explored, immersing players in a world that is as decorative as it is dangerous.

Spearheading the creation of Night City was Jakub Knapik, Lighting and FX Art Director for Cyberpunk 2077. Armed with the compositing tool Nuke and tasked with leading the studio’s Lighting and FX teams, Jakub also worked very closely with the Rendering team on the development of CDPR’s updated REDengine, and with the Nvidia team on the RTX implementation for Cyberpunk 2077.

Yet Jakub’s career started outside of games, when he joined Platige Image after studying architecture. “The studio became my real home for many years,” he tells us. “It was where I grew into more of a ‘proper’ VFX Supervisor/Compositor/Look Development Artist, and where on occasion I would need to become a one-man orchestra for a time.”

After Platige, Jakub struck out on his own as a freelance artist, working on a variety of projects—from video clips for Radiohead’s Thom Yorke to a promotional film with Neil deGrasse Tyson for The Martian.

“Artistically, it was a very fruitful time for me,” he comments. “I think this period of time is where I started to experiment a lot. I worked with amazing new people and was really open-minded, so when I got a call from CD PROJEKT RED, I didn’t hesitate. Video games are an absolutely amazing medium and I really wanted to dive into this opportunity. I just never predicted how new everything would be to me!”

We caught up with Jakub for a deep dive into Night City, and into how Nuke was used to create perhaps the most visually arresting game of 2020.

Q: What makes CDPR a special place to work, and what do you enjoy most about your job? What gets you up every day?

A: For me, it’s the constant evolution innate to the games medium. One of the first things I learned from an experienced developer here at CDPR was that games, unlike films, are alive even after release. There is always an area you can improve, do differently, or optimize.

On top of that, games have a very specific set of limitations when you compare them to movies, for example. The medium is a dream come true for ambitious minds that search for different approaches, as these constraints are often strong creative stimuli. It reminds me of the VFX scene from years back, when you had to work out new solutions because every new project had a unique set of challenges to overcome. Games are in that spot now, evolving rapidly both visually and technologically. So, alongside the tasks typically related to my position, there is always space for exploration, which is something I’ve always loved and valued as an artist.

Last but not least, having a chance to work with such incredibly talented people around you is sometimes intimidating but always super motivating. Being around that kind of company day in, day out pushes you to progress and improve. I’ve definitely learned a lot about myself since I joined.

Q: Can you give some insight into your relationship with Nuke? How and when were you first introduced to it?

A: I think it was back in 2009 when I was working at Platige Image as a VFX Supervisor/Compositor. I remember we were supposed to start a new film project with tons of 4K/2K plates from set, and massive render layers for crowd multiplications. I knew right away that the demands of the project would be asking a lot from the pipeline we had at the time.

I had read a lot about Nuke as an internal tool at Digital Domain, so it had been on my radar for some time, and I’d heard so much about its legendary speed. I have a tendency to try new things in my projects, and this was a perfect chance to bring the tool to the company and see what we could do with it. Moving to Nuke was like stretching your legs after a long transatlantic flight. There was so much power and so many new possibilities that I instantly fell in love with it, and I’ve never lost that feeling since. The way it allowed me to work with colours, the full luminance range, channels, and 3D camera projections was a revelation to me, and it changed not only the final outcome of the project but also me as a Compositor.

Nuke has this Swiss Army knife vibe to me; it can do so much. In the end, we managed to solve the problems that arose on set, and I feel we delivered a great-looking shot. I don't think that would have been even remotely possible without Nuke in action, and the decision to use it back then really saved us. Over the years I’ve learned to trust the software fully, and developed a sort of addiction to it; I actually use it whenever I can.

Q: Nuke was used in designing the lighting and color in CDPR’s proprietary REDengine. Can you dive into this a little more?

A: Cyberpunk 2077 presented a serious set of challenges for our newly developed rendering engine. The enormous scale of the city, with its complex verticality, countless overhangs, locations with mixed lighting, dynamic day/night cycle, and weather system: there was a lot to contend with! Night City posed a huge challenge from a data size perspective, so everything regarding lighting and weather had to be dynamic rather than baked in, as well as react to environmental changes. This approach is one of the toughest you can take, but because prebaked solutions wouldn't cut it for us, it was the best path forward.

Coming from the world of film, I had used a photorealistic approach to rendering for years, but this PBR-correct pipeline and physically correct lighting were a totally new paradigm for CD PROJEKT RED. I wanted to introduce it fully for Cyberpunk 2077. In essence, I wanted to create a sandbox city where physically correct lighting could give a believable look to hundreds, if not thousands, of small open-world locations, whatever the time of day or weather state. In areas like these, where street lamps, neons, shop lights, the sun, and the sky all exist in correct proportions to one another, I really wanted to push immersion by letting the player feel the passage of time: from the energy of scorching daylight to the vibrant colours of night slowly blending in through dusk. Again, I was super lucky to be working with the amazingly talented Rendering team here at CDPR, who had the skill and drive to make that a reality.

Among other challenges, one of the trickiest parts was to create a global illumination system that would provide proper skylight, sun, and other light-source bounces to deliver realistic and dynamic lighting to each location—so it looks and feels just like you’d expect it to. The complexity of developing this system comes largely from the design and demands of the city itself. We can have a primary light-source bounce that is physically correct but everything after that first light bounce needs a mathematical approximation, one we need to calculate and present in real time. In the real world, light bounces countless times, filling even the smallest, most complex streets with some soft skylight. In Night City we have hundreds of those small, narrow, or very occluded streets all over, and tuning the engine to provide proper results in those edge cases, at any time of day, was far from simple.

To make things easier, I used Nuke and an external physically correct path-tracing solution to build a reference database. With that in place, we could iterate on the engine and check each solution against the target. Being able to use Nuke more analytically as an iteration tool was a huge help, as we could fetch high dynamic range data from both renderers and compare it across the same locations, regardless of colour space or exposure. Precision was really key here. In the end, Night City is lit with a fully dynamic global illumination system that reacts to the smallest change of a light source, producing proper, realistic lighting throughout. With ray tracing enabled, it becomes a hybrid system that uses the strengths of both standard global illumination and ray tracing to deliver an even more substantial lighting solution.
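The interview does not say how the engine-versus-reference comparison was set up beyond the fact that it was done in Nuke. As a rough illustration of what comparing HDR data "regardless of exposure" can mean, here is a minimal Python sketch, not CDPR's tooling. It assumes both renders have already been converted to the same linear RGB colour space and flattened into equal-length lists of (r, g, b) tuples, uses Rec. 709 luminance weights, estimates the exposure offset in stops from log-average luminance, and then reports the remaining per-pixel luminance error in stops. The function names are hypothetical, and the comparison deliberately ignores chroma.

```python
import math

# Rec. 709 luminance weights for *linear* RGB. Assumption: both buffers were
# already converted to the same linear colour space before comparison.
LUMA = (0.2126, 0.7152, 0.0722)
EPS = 1e-6  # avoids log2(0) on black pixels


def luminance(rgb):
    r, g, b = rgb
    return LUMA[0] * r + LUMA[1] * g + LUMA[2] * b


def exposure_offset_stops(reference, candidate):
    """Exposure offset (in stops) that aligns candidate to reference,
    estimated from the difference of log-average luminances."""
    n = len(reference)
    log_ref = sum(math.log2(luminance(p) + EPS) for p in reference) / n
    log_cand = sum(math.log2(luminance(p) + EPS) for p in candidate) / n
    return log_ref - log_cand


def compare_hdr(reference, candidate):
    """Return (offset_stops, mean_abs_error_stops) after exposure matching.

    offset_stops: how many stops brighter the candidate must be made.
    mean_abs_error_stops: residual luminance mismatch once exposure is removed.
    """
    if not reference or len(reference) != len(candidate):
        raise ValueError("buffers must be non-empty and the same size")
    offset = exposure_offset_stops(reference, candidate)
    scale = 2.0 ** offset
    total = 0.0
    for ref_px, cand_px in zip(reference, candidate):
        ref_l = luminance(ref_px)
        cand_l = luminance(cand_px) * scale
        total += abs(math.log2(ref_l + EPS) - math.log2(cand_l + EPS))
    return offset, total / len(reference)
```

For example, an engine render that is uniformly one stop darker than the path-traced reference should report an offset of about 1.0 stop and a residual close to zero, so any larger residual points at a real lighting difference (such as missing bounce light in an occluded street) rather than an exposure mismatch. Working in stops keeps errors in dim alleys and bright neon areas on a comparable scale.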

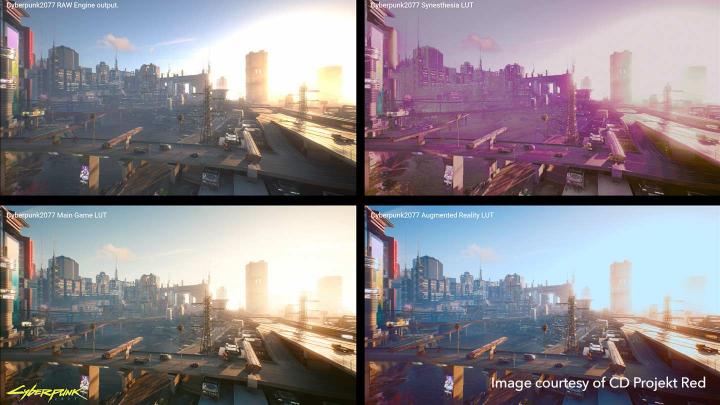

Another very important aspect of my work was the ability to create custom 3D LUTs. I think it is hard to ignore your roots, and I really wanted to treat Cyberpunk 2077 as if it were a film shot to composite. The Rendering team was on board with that approach, which is why, instead of a typical tone-mapping solution, we used a classic film LUT system. These were custom .cube files I created in Nuke for the main game, as well as for special instances such as seeing Night City as it was in the 2020s, scanning mode, or tank vision mode, to name just a few. I could play with many looks and color setups inside Nuke and iterate on many in-game locations at once, pushing them towards a balance between a more filmic look and gameplay-specific needs. That gave me a lot of control and helped to address the various visual and aesthetic demands of this super visually busy city.
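A .cube file is a plain-text 3D LUT: a `LUT_3D_SIZE N` header followed by N³ output RGB triplets, with red varying fastest, then green, then blue. The Python sketch below bakes an arbitrary colour transform into that layout and applies the result with trilinear interpolation. It illustrates the file format only, not the LUTs or pipeline described above. The helper names are hypothetical; input is assumed to already lie in the LUT's default 0 to 1 domain (a film-style setup would typically first map scene-referred HDR values through a log shaper); and keywords such as `DOMAIN_MIN`/`DOMAIN_MAX` are simply ignored by this parser.

```python
def make_cube_text(size, transform, title="sketch"):
    """Sample transform(r, g, b) -> (r, g, b) on a size^3 grid and return
    .cube text. Red varies fastest, then green, then blue."""
    if size < 2:
        raise ValueError("LUT_3D_SIZE must be at least 2")
    lines = [f'TITLE "{title}"', f"LUT_3D_SIZE {size}"]
    step = 1.0 / (size - 1)
    for b in range(size):
        for g in range(size):
            for r in range(size):
                out = transform(r * step, g * step, b * step)
                lines.append(" ".join(f"{c:.6f}" for c in out))
    return "\n".join(lines) + "\n"


def parse_cube(text):
    """Minimal parser: reads LUT_3D_SIZE and the numeric rows; other
    keywords (TITLE, DOMAIN_MIN, ...) and # comments are skipped."""
    size, table = None, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("LUT_3D_SIZE"):
            size = int(line.split()[1])
        elif line[0].isdigit() or line[0] in "-.":
            table.append(tuple(float(v) for v in line.split()))
    if size is None or len(table) != size ** 3:
        raise ValueError("not a valid 3D .cube LUT")
    return size, table


def apply_cube(size, table, rgb):
    """Look up one RGB value (clamped to 0..1) with trilinear interpolation."""
    def entry(ri, gi, bi):
        return table[ri + gi * size + bi * size * size]

    coords = []
    for c in rgb:
        x = min(max(c, 0.0), 1.0) * (size - 1)
        i0 = min(int(x), size - 2)  # keep i0 + 1 inside the grid
        coords.append((i0, x - i0))
    (r0, fr), (g0, fg), (b0, fb) = coords

    result = [0.0, 0.0, 0.0]
    for dr, wr in ((0, 1.0 - fr), (1, fr)):
        for dg, wg in ((0, 1.0 - fg), (1, fg)):
            for db, wb in ((0, 1.0 - fb), (1, fb)):
                weight = wr * wg * wb
                corner = entry(r0 + dr, g0 + dg, b0 + db)
                for k in range(3):
                    result[k] += weight * corner[k]
    return tuple(result)
```

Because trilinear interpolation reproduces linear transforms exactly, an identity or inverted LUT makes an easy sanity check; curved grades become approximations whose accuracy depends on the grid size (17, 33, and 65 are common choices).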

Q: How was Nuke used in the creation of Cyberpunk 2077?

A: Apart from the above-mentioned lighting applications, I personally found Nuke to be a perfect prototyping tool for game development. With its procedural nature and technical qualities, the scripts were very easy to analyze and reproduce using different tools later in the code implementation phase. With this in mind, one of my tasks was to establish the art direction for Cyberpunk 2077’s UI.

I started out with an amazing collaboration with the fantastic designer Michael Rigley. He created a big set of elements that I could use as a base for the next design steps. These, along with some new ones I created using Nuke, allowed me to build a set of working UI mockups to solidify the overall vision. I was able to create a full system with animated layers showing how the in-game environment, NPC scanning, weapon widget, and the holo-calls system would look, work, and feel.

I am, and always will be, a fan of ’80s electronics design mixed with the hi-fi culture of displays and data presentation. I wanted to implement the idea of a more physical, retro-like HUD design, one that would be projected via the player's cybernetic eyes. Most games use UIs that sit in limbo between the player and the game world itself. With Cyberpunk 2077, I wanted to add this beautiful and very functional layer of glass between the two, not to separate them but to merge them more seamlessly, using the cyberpunk setting to great effect. A lot of that initial draft was later used as a starting point for the final game UI designs and implementation.

Nuke was also super helpful when prototyping certain full-screen effects and using those visuals to communicate with the FX and Tech Art teams. That is how I was able to design a handy visual mockup for cyberspace in a relatively short time, which I used to show the other directors its potential and which later served as a more technical breakdown for the in-engine prototyping phase. I really feel Nuke is a perfect tool for this kind of art research, as well as a cross-discipline tool that helps teams communicate and iterate on ideas with each other. Nuke was also used in a more traditional sense during production to create some marketing materials and amazing short clips that you can find while exploring Night City.

Q: As a studio focused on creating highly-anticipated, acclaimed AAA games, tell me about the types of challenges you face, and how you typically overcome these. How does Nuke contribute here in your experience?

A: I think for these kinds of artistically and technically challenging projects, communication between interdisciplinary teams and smooth iterations are always tough to get right. We have a number of ways to make both of these smoother and easier to manage, one of which is creating a strike team that focuses on a feature and produces prototypes. But even with direct cooperation, ideas still need to be presented. This is where we leverage one of CD PROJEKT RED’s biggest strengths: the Concept Art team.

We have one of the most incredible concept art groups ever; it's hard to call it just one team. In fact, it’s a group of stellar artists spread among teams, producing countless concepts that are used to support design documents or production. The goal is always to solidify ideas and communicate them to all teams, starting from Design, through the Content teams, and finally to the Tech teams that need to create features for them. Problems can sometimes arise when an idea that needs verification sits somewhere between animation, art, and tech research, as a single image might not be detailed enough, or substantial enough, to verify it.

I mentioned it before, but I find Nuke to be an amazing tool that provides the missing piece of this particular puzzle. I used Nuke to develop some technical solutions, test visual ideas that would otherwise be difficult to test in development, make some working animated prototypes to gather feedback early, and help the process overall.

Q: What was your favourite asset to create or develop whilst working on Cyberpunk 2077, both inside and outside of Nuke?

A: I think one of the most fun assets I had the pleasure to work on was the Cyberpunk 2077 splash screen. When you make a game with such a big team, you need to find every possible occasion to inspire everyone with the right vibes. It was super early in production, but I felt that with the UI Art Direction draft approved, I could contribute in this regard, too.

I wanted to create something that would be a striking marriage of a pure digital feel and a very down-to-earth physicality. I wanted to show the logo in its digital form placed on a heavily beaten asset, showing the brutality of the world of Night City. Using simple 3D compositing with an animated camera, I created a multi-layered, deep digital screen presenting data streams, with simple black ink-like Cyberpunk text projected onto very scratched, well-used plexiglass. At some point I also made a variant with a bleeding logo mimicking the visual distortion you find on broken screens, but in the end we decided to keep the main logo silhouette clear. At the very beginning, that black logo shocked people internally, as everyone was already used to the yellow and cyan one. Eventually they warmed up to it, and no one thought I had forgotten a layer!

Q: What do you think the future holds for post-production techniques and tools in video game development, and vice versa—for real-time technology, game engines and virtual production in films and series?

A: I think we’ve known for years that games and films are two mediums reaching out to each other on many levels, both as an inspiration and as a source of technology exchange. I think the last three years, with the virtual production boom and a massive level-up in the quality of real-time engine output (powered in part by the rise of ray tracing), have made the final point to any disbelievers. The gains for both the film and animation industries are already visible, with many full-length features made with the help of game engines and pipelines that were once used exclusively in game development.

Personally, I was totally blown away by The Mandalorian, its quality and the creativity behind it. I think it’s a good example of how some elements of classical post pipelines are being revisited today with totally new tools, and these are providing more freedom without compromising on quality, making them fully usable for the most demanding projects. I feel that this is just the beginning, too. Proofs of concept are being made with great results. I am personally a big fan of ray tracing being used in real time, and I feel its quality will eventually transform sets into creative sandboxes where directors and crews can find new ways of working. One thing I would be curious to see integrated into virtual production is light field technology, researched and developed by my personal hero Paul Debevec. Having a way to blend “cuts” of reality with fully CG environments in real time on set would be the next revolution.

I also see a huge benefit for both mediums, as big portions of creative pipelines can overlap, meaning that working on franchises with a spectrum of projects will be so much easier, faster, and more coherent. But at the same time, I feel there are still gains to be made purely within the games industry. The film industry’s review tools are blended into the production process with amazing seamlessness; I feel more traditional post pipelines were seriously optimized over the years to make this a much smoother process for films. Taking some cues from film could, for example, make design, technical, art, and gameplay reviews a much more efficient and complete process across the board.

I think games are reacting to today’s expectations and are growing so much in terms of content and quality. The same problems that other mediums faced, and overcame, in the past have started to arise in games and have to be solved daily. Even though the same tools and techniques are already being used more and more in games, I feel that the big boom is still ahead of us. I am really looking forward to it.

Q: Are there any trends or technologies on the horizon that you’re particularly excited by, and will affect your studio or workflows in a beneficial way?

A: I am and always will be mesmerized by the reality around me. The world is so beautifully, magically sophisticated that through my whole life I’ve always searched for a way to imitate it. I believe that was always more attainable in film post production, but the game industry — with its technological limitations — has had it much harder. Even today, photorealism is not always adopted as the go-to style to consider when making a new game.

I feel that it’s slowly changing and, though it won’t happen overnight, we are witnessing the same revolution that happened to film in 1998 with the introduction of ray tracing into production, when the amazing animated short Bunny, by Blue Sky Studios, was presented to the world. That one step totally changed the way films were made and the way they were looked at later on. It’s not just ray tracing, however.

Nowadays, there are technologies capable of turning accurate, real-world references into a CG presentation, and they have the potential to dramatically level up virtual production quality. Technologies focused on creating CG characters have also seen amazing growth, and together with all the new facial animation tools, this could make games even more believable in presenting character emotions. Procedural tools used in asset and world creation will also continue to help developers attain quality results in a fraction of the time needed in the past. All of this new software, as well as more powerful hardware, will, I believe, make a huge impact and shape all sorts of pipelines in the future, ushering in a change across many fields similar to the one that took place for films 23 years ago, and potentially an even bigger one.