Foundry at GTC: A step-by-step guide to CopyCat

Last week saw NVIDIA’s virtual GTC conference for 2021.

Among the speakers was Foundry’s own Ben Kent, Research Engineering Manager & AI Team Lead. He was joined by Thiago Porto, VFX Supervisor/Senior Comp at MPC New York, and together they dived into machine learning and CopyCat, new in Nuke 13.

While we’ve briefly discussed CopyCat before, Ben’s presentation gave a whole new view into Nuke’s new ML toolset, as well as an in-depth look at machine learning in the VFX industry.

Scroll for a full breakdown of the presentation, and a step-by-step guide to CopyCat—from its conception to how you can use it on your next project.

It started with an idea

Nuke is often seen as ubiquitous in the VFX industry. Part of that involves staying up to date and providing artists with the latest tech. That's why Nuke 13.0 saw the first implementation of machine learning, the goal that Foundry's AI Research team was created to achieve.

But where to start?

Ben and the Research team first looked into producing a fairly conventional image-to-image machine learning tool, the norm for most digital software. Because it is impossible to know what footage an artist might want to use, these networks tend to be pre-trained and generic. To work effectively, they need a large dataset, often hundreds or thousands of images, to train the neural network.

A problem with these tools is that they produce what Ben describes as 'decent quality effects'. There is nothing explicitly wrong with that, but decent is not the perfect output that VFX artists need. Plus, there is the added chance that they won’t produce the desired effect at all, which can be a massive time-waster.

With all this in mind, the Research team continued to work on creating ML tools that were the right fit for artists. But it wasn’t until Ben’s unexpected moment of machine learning enlightenment that they hit the jackpot.

How CopyCat came to be

The initial training template the team created was based on the conventional ML tools mentioned above. This in-house tool was meant to make it easy to train new neural networks and to let customers experiment with machine learning inside Nuke.

It wasn’t until Ben started to experiment with the template that things started to fall into place and the team found a better way of creating the ML toolset. Using a scene from his own film, Ben wanted to see what could be done with the ML model and attempted to deblur a few out-of-focus shots using only eleven small crops.

"Back in 2011, before deep learning was such a big thing, I spent months and months working on the state of the art traditional algorithms for deblur," Ben tells us in his presentation. "The results were never very good, so I was extra keen to try some deep learning magic on this particular example to see if it could do better."

On the surface, the idea seemed far-fetched, and Ben had no expectation that it would work; it was merely an experiment. But to his surprise, it did! The shots were deblurred using just a few sample images, and the result gave Ben an insight that proved vital to the creation of CopyCat.

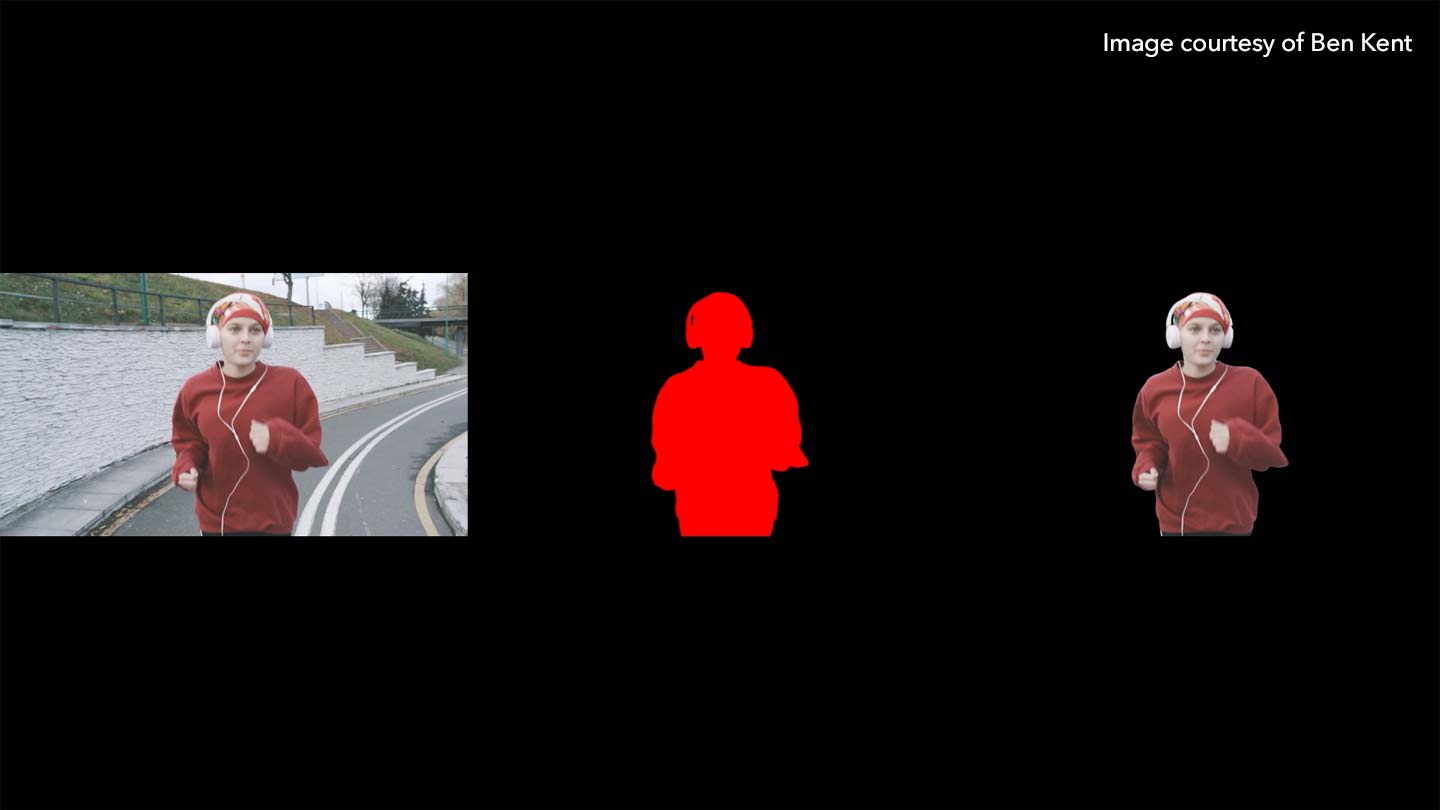

Before-and-after images of a shot from Ben's film. The image on the left shows the original shot; the image on the right shows the shot after it has been run through CopyCat for deblurring.

With conventional machine learning, users expect instant results, and while those results might be lower quality, that is usually all they need. VFX, by contrast, demands greater attention to detail and quality in order to reach the standard that audiences expect, which is why “machine learning in VFX doesn’t fit the conventional wisdom we were working to,” as Ben puts it in his presentation.

CopyCat offers that level of control by letting artists train their own neural network specific to the shot they’re working on. It isn’t a generic or pre-trained tool. Instead, artists can tailor it to their needs and create whatever effect their shot calls for, without being limited to a predefined set of effects.

The inner workings of CopyCat

CopyCat aims to put machine learning into the hands of artists. Every VFX shot presents its own unique challenges, requiring the creativity and ingenuity of the artist. While pre-trained tools do help to an extent, sometimes artists need an effect specific to what they’re working on.

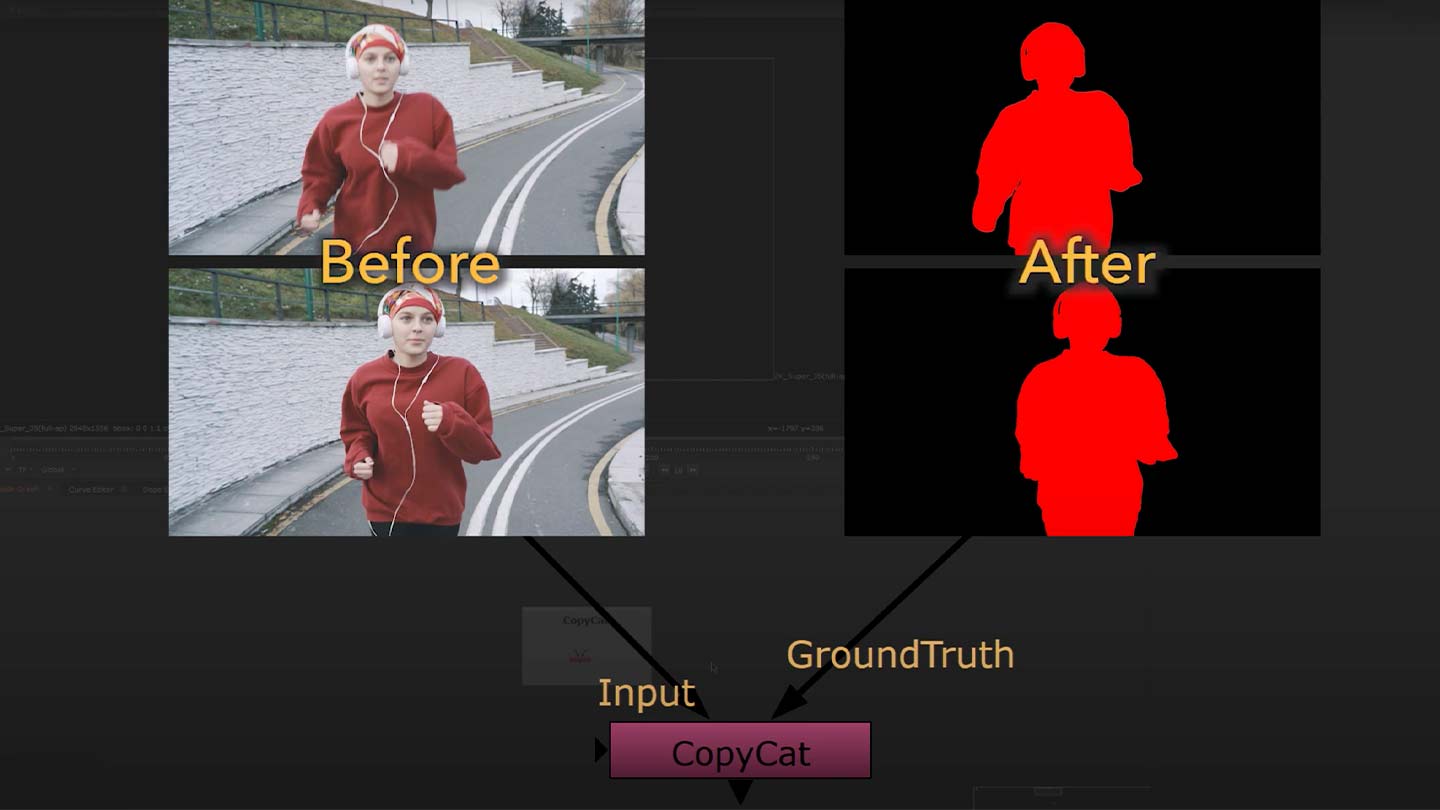

With CopyCat, artists can train their own neural networks for whatever effect they need for their shot or sequence. It also doesn’t require huge datasets. Instead, CopyCat needs only a small set of before-and-after image pairs, from which the neural network learns to reproduce the effect.
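To make the idea of learning from a handful of before-and-after pairs more concrete, here is a minimal Python sketch of one common way small datasets are stretched: cutting many aligned random crops from each pair. This is a general illustration, not CopyCat's actual implementation; the function name `sample_paired_crops` and its parameters are hypothetical, and images are represented as plain nested lists.

```python
import random


def sample_paired_crops(before, after, crop_size, count, seed=0):
    """Cut `count` aligned square crops from one before/after image pair.

    Hypothetical helper for illustration only. Images are 2D lists of
    pixel values and must have identical dimensions.
    """
    height, width = len(before), len(before[0])
    if (len(after), len(after[0])) != (height, width):
        raise ValueError("before and after images must have the same size")
    if crop_size > height or crop_size > width:
        raise ValueError("crop_size is larger than the image")

    rng = random.Random(seed)
    pairs = []
    for _ in range(count):
        # The same window is used for both images so the crops stay aligned.
        top = rng.randint(0, height - crop_size)
        left = rng.randint(0, width - crop_size)
        crop_in = [row[left:left + crop_size]
                   for row in before[top:top + crop_size]]
        crop_out = [row[left:left + crop_size]
                    for row in after[top:top + crop_size]]
        pairs.append((crop_in, crop_out))
    return pairs


# Toy 8x8 "before" frame, and an "after" frame where every pixel is doubled.
before = [[x + 8 * y for x in range(8)] for y in range(8)]
after = [[2 * value for value in row] for row in before]

pairs = sample_paired_crops(before, after, crop_size=4, count=16)
print(len(pairs), "training pairs from one image pair")
```

Because each crop of the input is matched with the same region of the target, a single image pair can supply many distinct training examples.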

Alongside CopyCat, Nuke 13.0 also introduced the Inference node which works as part of the ML toolset. The Inference node runs the neural networks produced by CopyCat. Once the network has been successfully trained by CopyCat, its weights will be saved in a checkpoint. This can then be loaded in the Inference node and applied to the remainder of a sequence.
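The train, checkpoint, then infer workflow described above can be sketched in plain Python. This is a toy stand-in under stated assumptions, not Nuke's API or checkpoint format: the "network" is a two-parameter gain and offset model, the checkpoint is a JSON file, and the names `train`, `save_checkpoint`, `load_checkpoint` and `infer` are hypothetical.

```python
import json
import os
import tempfile


def train(pairs, steps=500, lr=0.5):
    """Fit out = gain * in + offset to (input, target) pixels by gradient descent."""
    gain, offset = 1.0, 0.0
    n = len(pairs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each weight.
        grad_gain = sum(2 * (gain * x + offset - y) * x for x, y in pairs) / n
        grad_offset = sum(2 * (gain * x + offset - y) for x, y in pairs) / n
        gain -= lr * grad_gain
        offset -= lr * grad_offset
    return {"gain": gain, "offset": offset}


def save_checkpoint(weights, path):
    """Write the trained weights to disk (JSON is an assumption for this sketch)."""
    with open(path, "w") as handle:
        json.dump(weights, handle)


def load_checkpoint(path):
    """Read previously saved weights back from disk."""
    with open(path) as handle:
        return json.load(handle)


def infer(weights, frame):
    """Apply the trained weights to every pixel of an unseen frame."""
    return [weights["gain"] * x + weights["offset"] for x in frame]


# Training step: a few before/after pixel samples (after = 0.5 * before + 0.2).
samples = [(x, 0.5 * x + 0.2) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
weights = train(samples)

with tempfile.TemporaryDirectory() as folder:
    checkpoint_path = os.path.join(folder, "checkpoint.json")
    save_checkpoint(weights, checkpoint_path)

    # Inference step: only the checkpoint is needed, not the training data.
    loaded = load_checkpoint(checkpoint_path)
    result = infer(loaded, [0.1, 0.9])

print(result)
```

The point of the split is that training happens once on a few samples, while the saved weights can then be applied to as many further frames as needed.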

Together, these tools allow artists to deblur or upscale shots, make beauty fixes, and more. Matty McDee, a Compositor at FuseFX, goes as far as to say: “Nuke’s ML CopyCat node is going to impact beauty work in the way that SmartVectors did [...] I'm very excited.”

What it can do hasn’t been defined by the Nuke or Research team—it’s up to the artist to create what they need.

Of course, it wasn’t all plain sailing. Machine learning tools can be unpredictable, as Ben says in his presentation: “ML training can be as much an art form as it is a science.” The technology is also cutting-edge, with new versions coming out every few months, and keeping on top of these creates a large amount of overhead.

For artists who aren't ML wizards, training neural networks can be scary. That’s why the Research team worked with the design team to produce an easy-to-use, artist-friendly interface for CopyCat. Alongside this, our content team has created a whole host of tutorials, which you can find on our Learn site. These provide an in-depth breakdown of how to use Nuke’s ML toolset.

There are more improvements and extensions that the Nuke team, Ben and the Research team are working on, so Foundry can provide the best possible ML solution inside of Nuke for artists.

Now it’s your turn

The AI Research team was created for a reason: to integrate machine learning into Foundry tools. In doing so, they surpassed all expectations. CopyCat and the other machine learning tools found in Nuke allow artists to discover new ways of approaching shots and, most importantly, they put ML at the service of your imagination.

In his presentation, Thiago took us through a real-life demonstration of how you can use CopyCat and how to quickly compose shots—just one of the many ways it can be used. We’ve seen a great response and have been inspired by how artists are using CopyCat and the beautiful shots that are being made.