CopyCat: bringing machine learning into Nuke’s toolset

It’s no secret that machine learning (ML) has been on the rise in the visual effects (VFX) industry for the past few years. With its increased popularity comes an opportunity for a more streamlined and efficient way of working.

This is especially important in the changing climate, with schedules getting tighter and projects becoming more complex. Artists are met with fresh challenges and need new ways of working and tools that can keep pace.

ML is a saving grace for many and is becoming a crucial part of VFX pipelines everywhere.

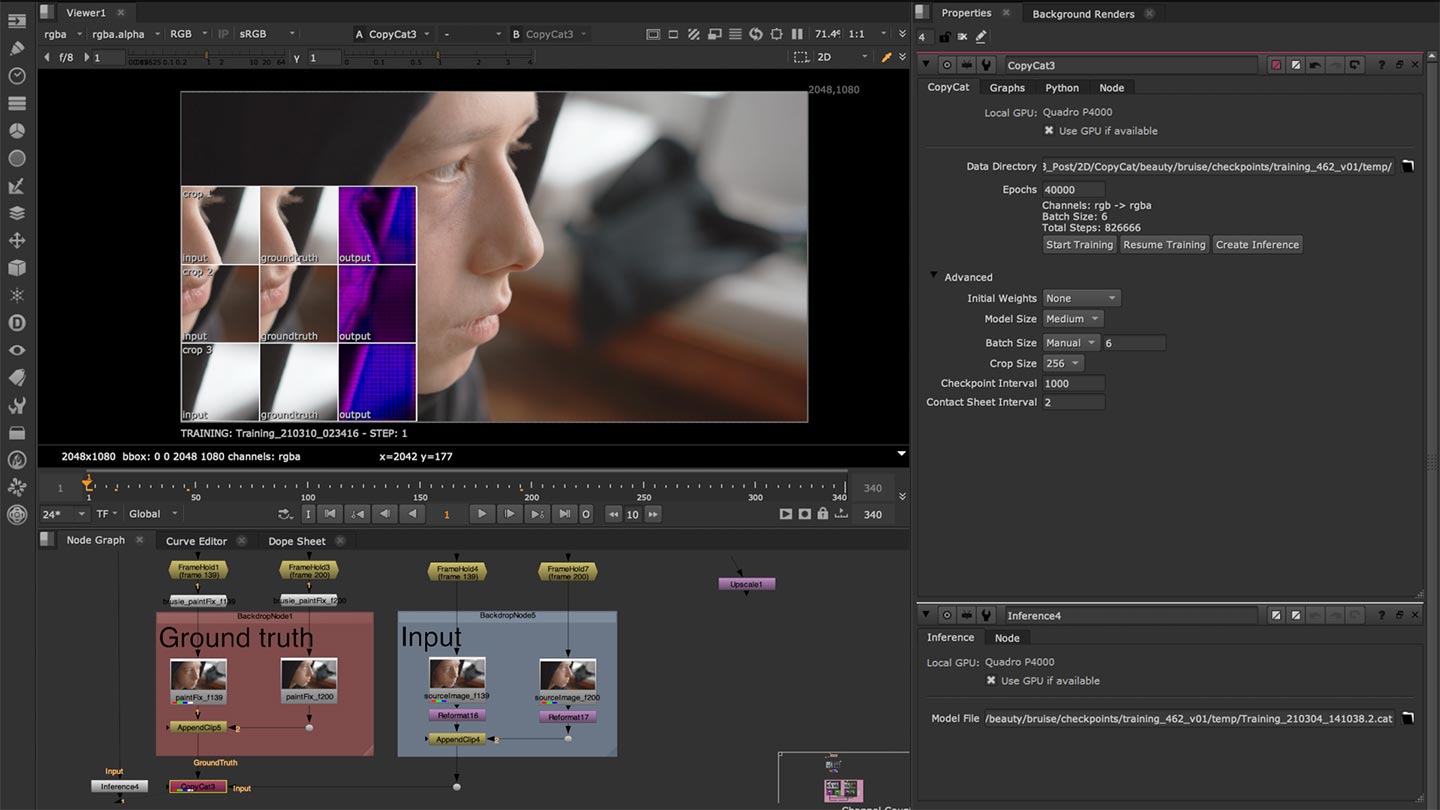

With this in mind, Foundry, as part of the recent Nuke 13.0 release, has integrated a new suite of machine learning tools including CopyCat—a plug-in that allows artists to train neural networks to create custom effects for their own image-based tasks.

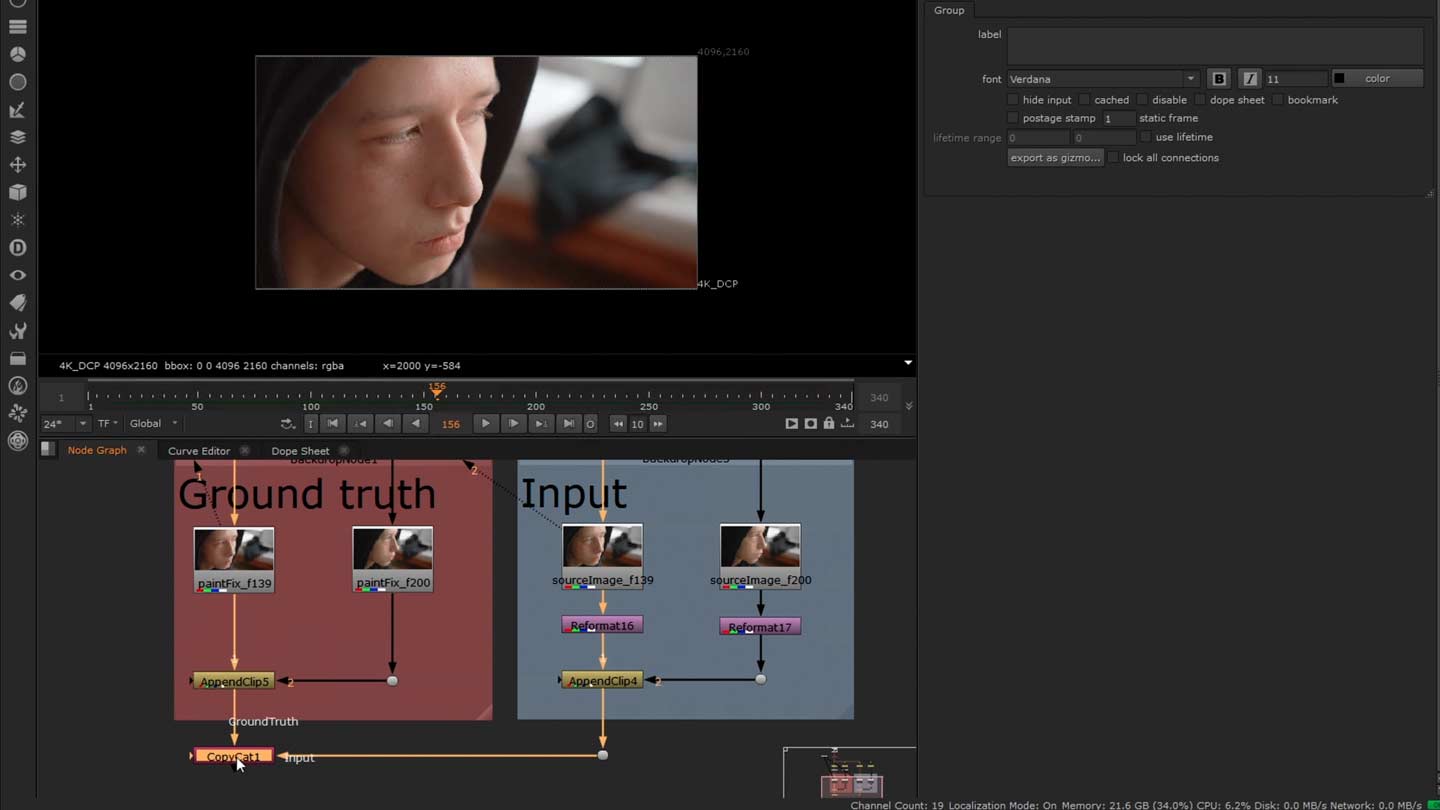

CopyCat is designed to save artists huge amounts of time. If a complex or time-consuming effect, such as creating a garbage matte, needs to be applied across a sequence, an artist can feed the plug-in just a few example frames. CopyCat will then train a neural network to replicate the transformation from before to after, and that network can be used to apply the effect to the rest of the sequence.
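To make the idea concrete, here is a toy Python sketch of learning a before-to-after mapping from a few example frames and then applying it to the rest of a sequence. It is not CopyCat's network or Nuke's API. The "model" is a single gain and offset fitted by least squares, frames are flat lists of pixel values, and the names `fit_gain_offset` and `apply_effect` are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Frame = List[float]  # a frame flattened to a list of pixel intensities (assumption)


def fit_gain_offset(before: Sequence[Frame], after: Sequence[Frame]) -> Tuple[float, float]:
    """Fit after ~= gain * before + offset by ordinary least squares.

    A stand-in for "training": all pixels of all example pairs are pooled.
    """
    xs = [p for frame in before for p in frame]
    ys = [p for frame in after for p in frame]
    if not xs or len(xs) != len(ys):
        raise ValueError("before/after examples must be non-empty and the same size")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("example pixels must not all be identical")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    gain = cov_xy / var_x
    offset = mean_y - gain * mean_x
    return gain, offset


def apply_effect(frame: Frame, gain: float, offset: float) -> Frame:
    """Apply the learned mapping to one frame."""
    return [gain * p + offset for p in frame]


# A few hand-finished example frames; the "effect" here is a simple grade (2x + 0.1).
examples_before = [[0.0, 0.1, 0.2], [0.3, 0.4, 0.5], [0.6, 0.7, 0.8]]
examples_after = [[2.0 * p + 0.1 for p in frame] for frame in examples_before]

# "Train" on the examples only.
gain, offset = fit_gain_offset(examples_before, examples_after)

# Apply the learned effect to frames the artist never touched.
rest_of_sequence = [[0.05, 0.15, 0.25], [0.9, 0.35, 0.45]]
processed = [apply_effect(frame, gain, offset) for frame in rest_of_sequence]
```

A real network learns a far richer transformation than one gain and offset, but the workflow has the same shape: a handful of before/after pairs in, a reusable mapping out.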

We take a look at this exciting new toolset and chat with Ben Kent, Research Engineering Manager and A.I. Research Team Lead at Foundry, to find out more.

A lightbulb moment

In the beginning, the initial ML Server created by Foundry’s research team was meant to allow customers to easily experiment with ML inside Nuke, as well as to provide an internal tool for quick network prototyping. Little did the team know it would inspire a whole new way of looking at machine learning in visual effects.

Conventional wisdom suggested that the ML Server would need a large database of images to learn from, roughly tens of thousands, in order for it to be effective. From this dataset of before and after images, the ML Server would then train a neural network to do whatever task the user asked of it, like deblurring an image.

It wasn’t until Ben started to test the ML Server that his mindset changed. Whilst figuring out how to start a training run, Ben experimented on an out-of-focus shot from his own film, using just eleven small crops in an attempt to bring it back into focus. With such a tiny dataset he had low expectations of the outcome. To his surprise, it worked.

“That's when it kind of occurred to me that visual effects isn't like general machine learning,” Ben tells us. “With normal machine learning, you have to use these broad data sets because you never know what footage the user's going to want to use it on. The user expects instant results, possibly running on low-performance hardware like a phone and there's no guarantee they're very skilled or tech-savvy [...] it's actually completely the opposite in visual effects.”

While the underlying technology remains the same, in VFX we can leverage the skills and knowledge of the artist to help train the network, rather than relying on generic pre-trained tools. Even though the results may take longer, artists would rather spend the time training specifically for their shot, knowing that they’d get the best outcome possible.

It was from this realization that CopyCat was born.

What Nuke and ML mean for your workflow

CopyCat forms part of the machine learning toolset that has been integrated into NukeX. The main aim behind the CopyCat node is to enable artists to use the power of machine learning to create bespoke effects and tools with a high-quality result in relatively little time.

By using CopyCat, artists can train neural networks to complete a whole range of tasks using only a small number of reference frames. Once trained, the network replicates the effect and can be applied across similar sequences for whole shows.

What’s more, the user-friendly tool allows artists to work easily at scale and comes equipped with a streamlined interface and graphs, so it’s easier to monitor the ML training properly. Hendrik Proosa, CEO & VFX Supervisor at Kaldera, comments:

“CopyCat is a very promising new addition to Nuke! An expandable, yet artist-friendly toolset rather than fixed "magic button" approach to bringing machine learning to VFX. CopyCat experiments have incited quite a lot of excitement in the Nuke community and great new ideas and solutions are already emerging.”

And Rob Bannister, VFX Supervisor, BannisterPost, agrees:

“In the short time I've used the new CopyCat node, it has already saved me from a few long nights, and helped meet some tight deadlines. The new ML toolset is great and helps reduce redundant work like creating mattes. I’m able to focus on "one-off" shots or look development while training a network in the background to perform the same work on similar shots in the sequence.”

The ability to create and apply your own high-quality, sequence-specific effects is a big step for artists and allows you to have more creative control over your work as well as save huge amounts of time.

“Unlike canned machine learning tools,” Ben tells us, “CopyCat gives freedom and flexibility to artists by allowing them to tailor machine learning to their specific needs and is limited only by the creativity of the artists and not the people who designed the tool.”

The future for ML and Nuke

CopyCat isn’t the only ML tool to be implemented into Nuke and NukeX. The Nuke 13.0 release also saw the addition of the Inference node, which works in conjunction with CopyCat.

The Inference node runs the neural networks produced by CopyCat. Once CopyCat has successfully trained a network, its weights are saved in a checkpoint, which can then be loaded in the Inference node and applied to the remainder of a sequence.
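The train-then-infer handoff described above can be sketched in a few lines of Python. This is only an illustration of the checkpoint pattern, not Nuke's API or file format: the JSON file, the two-value "weights", and the functions `save_checkpoint`, `load_checkpoint` and `run_inference` are all hypothetical.

```python
import json
import os
import tempfile
from typing import Dict, List


def save_checkpoint(weights: Dict[str, float], path: str) -> None:
    """Training side: write the learned weights to disk."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(weights, fh)


def load_checkpoint(path: str) -> Dict[str, float]:
    """Inference side: read the weights back, with no access to the training data."""
    with open(path, "r", encoding="utf-8") as fh:
        return json.load(fh)


def run_inference(frame: List[float], weights: Dict[str, float]) -> List[float]:
    """Apply the loaded weights to one frame (a toy gain/offset model)."""
    return [weights["gain"] * p + weights["offset"] for p in frame]


# Pretend these values came out of a finished training run.
trained = {"gain": 2.0, "offset": 0.5}

with tempfile.TemporaryDirectory() as tmp:
    checkpoint_path = os.path.join(tmp, "effect_checkpoint.json")
    save_checkpoint(trained, checkpoint_path)
    # The inference step needs only the checkpoint file.
    loaded = load_checkpoint(checkpoint_path)

# Apply the loaded network to the remainder of the sequence.
remaining_frames = [[0.0, 1.0], [2.0, 3.0]]
results = [run_inference(frame, loaded) for frame in remaining_frames]
```

Separating the two steps this way means training can run once, in the background, while the saved checkpoint is reused on any number of similar shots.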

Nuke 13.0 also introduced two other new nodes to help tackle common compositing tasks.

The Upscale and Deblur nodes are optimized using the same ML methodology behind CopyCat. The Upscale node allows artists to upscale footage by 2x, while the Deblur node removes motion blur from footage and is ideal for reducing blur in stabilized footage. The Upscale and Deblur models are pre-trained and don’t require CopyCat, but they can be used as the basis for further training using CopyCat.

All in all, it is an exciting time for Nuke and machine learning—and this is only the beginning. The Research and Product teams at Foundry are continually working hard on innovative uses of machine learning within our products and looking at how to make the artist experience the best it can be.

We’ll leave you with the words of Richard Servello, Senior Compositor and Compositing Supervisor, who has been testing the machine learning toolset extensively since the Nuke 13.0 release:

“Machine learning in Nuke is the first tool that isn't specific in its purpose: it's the most open-ended tool ever created for production. It can be a roto assistant, a paint assistant... it can even be an animation assistant. I can't wait to see the creative uses it will bring, and I look forward to really utilizing it and seeing it grow. This is an exciting time for VFX,” he continues. “We’re about to enter the next phase that does away with a lot of the technical hurdles and paves the way for much more creative solutions to problems!”