Machine learning for artists: the latest trends

Machine learning (ML) is one of the hot topics in the world of visual effects (VFX) at the moment. Over the past few years, we’ve seen the impact it’s started to have on the VFX industry and the technology that has emerged because of it.

In fact, it almost seems like there is a new ML tool or program being released every other week, all promising to improve the way artists work, create and collaborate. So, amongst the many, what are the key upcoming tools that you, as artists, should watch out for?

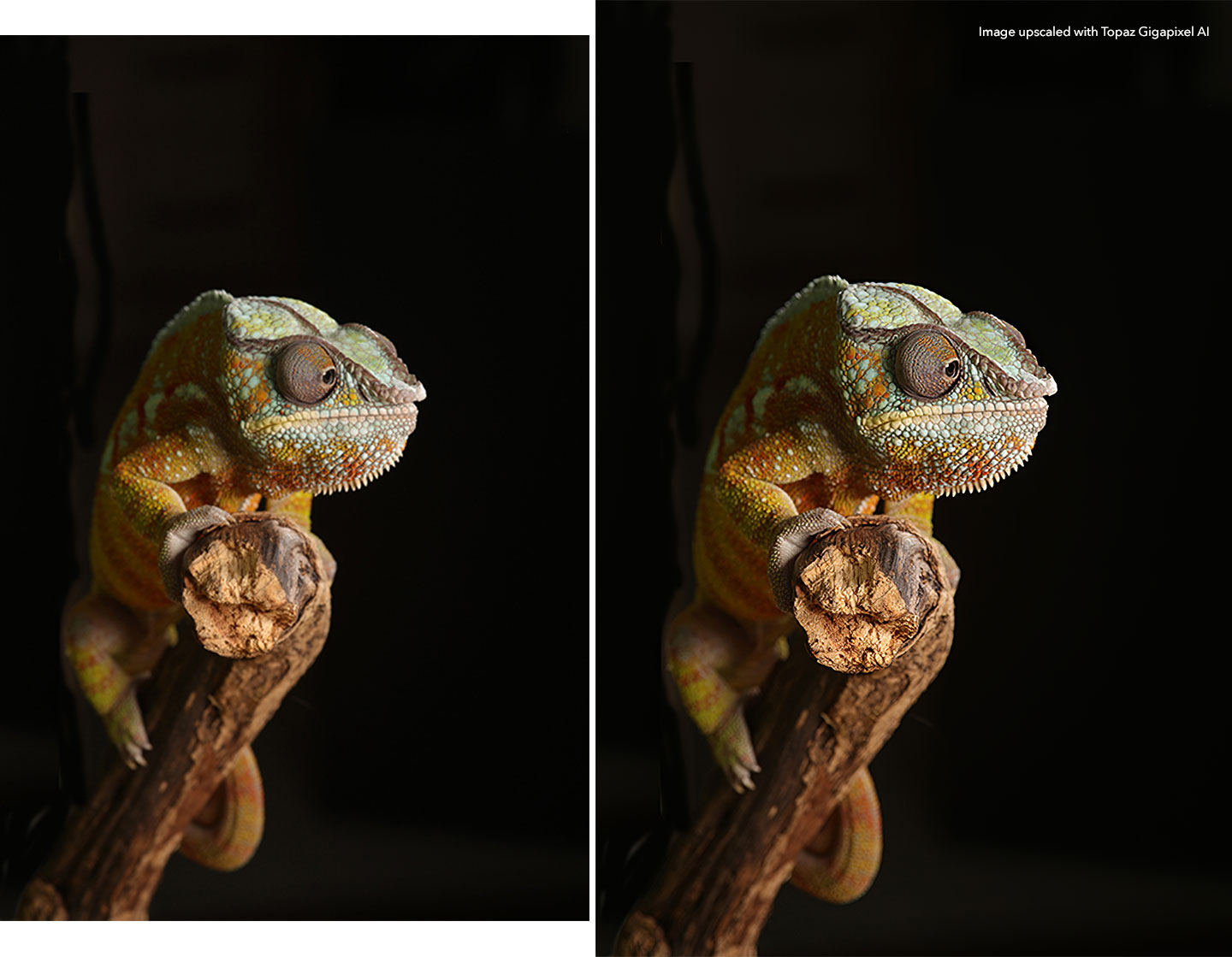

Upscaling your footage

Upscaling, or up-res, is not a new phenomenon: it involves enlarging a video to a higher resolution. The process can take a long time, the quality of the results varies, and artists often have to ‘clean up’ the footage afterwards, removing any blurriness or noise.

But recently, with the help of ML and artificial intelligence (AI), the process has started to improve dramatically, as these tools take on its most arduous parts.

It works much like other machine learning algorithms. Drawing on the training data it has seen, the model takes a low-resolution image and creates a new version that looks the same but contains more pixels. Not only is this method quicker, but the results are often of higher quality as well.
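To make the idea of learning from training data concrete, here is a minimal sketch in Python that assumes only NumPy. It is not how any particular upscaling product works: instead of a deep network, it fits one linear mapping, by least squares, from each low-resolution pixel’s 3×3 neighbourhood to the 2×2 block of high-resolution pixels it should become. Training pairs are made by downsampling high-resolution images. The function names (`downsample`, `patches`, `hr_blocks`, `train`, `upscale`) and the synthetic sine-pattern “footage” are hypothetical.

```python
import numpy as np

def downsample(img: np.ndarray) -> np.ndarray:
    """Average each 2x2 block to simulate low-resolution footage."""
    h, w = img.shape[0] // 2, img.shape[1] // 2
    return img[: 2 * h, : 2 * w].reshape(h, 2, w, 2).mean(axis=(1, 3))

def patches(lr: np.ndarray) -> np.ndarray:
    """Feature rows: the 3x3 neighbourhood of every low-res pixel plus a bias term."""
    h, w = lr.shape
    padded = np.pad(lr, 1, mode="edge")
    cols = [padded[dy:dy + h, dx:dx + w].ravel() for dy in range(3) for dx in range(3)]
    cols.append(np.ones(h * w))
    return np.stack(cols, axis=1)  # shape (h * w, 10)

def hr_blocks(hr: np.ndarray) -> np.ndarray:
    """Targets: the 2x2 high-res block under each low-res pixel, flattened to 4 values."""
    h, w = hr.shape[0] // 2, hr.shape[1] // 2
    return hr[: 2 * h, : 2 * w].reshape(h, 2, w, 2).transpose(0, 2, 1, 3).reshape(h * w, 4)

def train(hr_images: list[np.ndarray]) -> np.ndarray:
    """Fit one linear map from neighbourhoods to 2x2 blocks by least squares."""
    X = np.concatenate([patches(downsample(im)) for im in hr_images])
    Y = np.concatenate([hr_blocks(im) for im in hr_images])
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return W  # shape (10, 4)

def upscale(lr: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Predict a 2x2 block per low-res pixel and reassemble an image twice the size."""
    h, w = lr.shape
    blocks = patches(lr) @ W
    return blocks.reshape(h, w, 2, 2).transpose(0, 2, 1, 3).reshape(2 * h, 2 * w)

# Hypothetical training "footage": smooth sine patterns at a few scales.
yy, xx = np.mgrid[0:64, 0:64]
train_imgs = [np.sin(xx / s) * np.cos(yy / s) for s in (3.0, 5.0, 8.0)]
W = train(train_imgs)

# Upscale a frame the model has not seen.
test_hr = np.sin(xx / 4.0) * np.cos(yy / 6.0)
sr = upscale(downsample(test_hr), W)
print(sr.shape)  # (64, 64)
```

Simple pixel duplication is one of the mappings this model can represent, so on its training data the fitted map can only match or beat it. Production upscalers swap the linear map for deep networks trained on large datasets, but the principle of learning missing detail from low/high-resolution pairs carries over.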

What’s more, working with lower-resolution footage dramatically reduces render times compared with high-resolution footage such as 4K. ML upscaling lets artists work with lower-resolution footage when required, and then upscale it to a higher resolution when the project needs to be delivered.
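A back-of-the-envelope check of the saving, written in Python. It assumes, as a simplification, that render time scales roughly with the number of pixels rendered; real render costs also depend on sampling, scene complexity and more. The format table and function names are hypothetical.

```python
# Common frame sizes as (width, height) in pixels.
FORMATS = {
    "HD": (1920, 1080),
    "UHD 4K": (3840, 2160),
}

def pixel_count(name: str) -> int:
    """Total pixels per frame for a named format."""
    width, height = FORMATS[name]
    return width * height

def pixel_ratio(work: str, deliver: str) -> float:
    """How many times more pixels the delivery format has than the working format."""
    return pixel_count(deliver) / pixel_count(work)

print(pixel_count("HD"))              # 2073600
print(pixel_count("UHD 4K"))          # 8294400
print(pixel_ratio("HD", "UHD 4K"))    # 4.0
```

Under that assumption, working in HD and upscaling to UHD 4K only at delivery means rendering a quarter of the pixels per frame.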

There have also been developments in using machine learning to denoise footage and images. Much like in the up-res process, the ML algorithms learn what noise looks like from the data they were trained on, and use this to produce a new, clean image. The two processes can work in tandem, producing higher-quality images in a shorter time frame and giving artists more time to concentrate on creative work.
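The same learn-from-examples idea can be sketched for denoising, again assuming only NumPy. Rather than a neural network, this minimal example learns a single 5×5 linear filter by least squares from pairs of noisy and clean frames. The synthetic frames, the Gaussian noise level and the function names are all hypothetical.

```python
import numpy as np

def neighbourhoods(img: np.ndarray, k: int = 5) -> np.ndarray:
    """One row per pixel holding its k x k neighbourhood (reflect-padded at the edges)."""
    r = k // 2
    h, w = img.shape
    padded = np.pad(img, r, mode="reflect")
    cols = [padded[dy:dy + h, dx:dx + w].ravel() for dy in range(k) for dx in range(k)]
    return np.stack(cols, axis=1)  # shape (h * w, k * k)

def learn_denoiser(pairs, k: int = 5) -> np.ndarray:
    """Least-squares fit of a k x k filter mapping noisy neighbourhoods to clean pixels."""
    X = np.concatenate([neighbourhoods(noisy, k) for noisy, _ in pairs])
    y = np.concatenate([clean.ravel() for _, clean in pairs])
    weights, *_ = np.linalg.lstsq(X, y, rcond=None)
    return weights  # shape (k * k,)

def denoise(noisy: np.ndarray, weights: np.ndarray, k: int = 5) -> np.ndarray:
    """Apply the learned filter to every pixel of a noisy frame."""
    return (neighbourhoods(noisy, k) @ weights).reshape(noisy.shape)

# Hypothetical training data: clean synthetic frames plus Gaussian noise.
rng = np.random.default_rng(42)
yy, xx = np.mgrid[0:64, 0:64]
clean_frames = [np.sin(xx / s) * np.cos(yy / s) for s in (4.0, 6.0, 9.0)]
pairs = [(c + rng.normal(0.0, 0.2, c.shape), c) for c in clean_frames]
weights = learn_denoiser(pairs)

noisy_frame, clean_frame = pairs[0]
restored = denoise(noisy_frame, weights)
```

Because the filter is fitted to examples rather than hand-picked, it adapts to the kind of noise it was shown; ML denoisers apply that principle with far more expressive deep networks.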

These ML programs are also proving popular with the gaming community, where enthusiasts use them to upscale the graphics of old-school games such as Doom and Final Fantasy. Programs like Gigapixel AI have helped modders speed up the process and achieve high-quality results. While these programs aren’t instantaneous and still require some hard work, the results in the revamped games and TV shows have been incredible.

New digital faces

It’s likely that you’ve already heard of one of VFX’s most popular trends: digital humans and deepfakes. The technology has taken the industry by storm, with films like Gemini Man and Captain Marvel only adding fuel to the fire. It comes as no surprise, then, that we continue to see more advancements in this area, especially related to machine learning.

One of the newest developments in the world of digital humans is face replacement. The method replaces a person’s face with another version of the same face, unlike the deepfake trademark of putting one person’s face on someone else’s body.

The method stems from CannyAI’s video dialogue replacement technology and uses neural rendering to build a representation of the face in the scene. Because the face placed on the new image is the same face, it already matches the original’s shape and form, which makes the method more accurate than swapping in a completely different face. One example is an advert for SodaStream that was dubbed into Hebrew using CannyAI.

Face replacement works well for dubbing TV shows and videos. It allows studios to reuse existing footage, and means that a mistake doesn’t require a reshoot. This saves both time and money, and lets artists change how the face looks and moves without having to create a whole digital human.

There have also been developments in high-resolution 3D human digitization thanks to machine learning and deep neural networks. Creating 3D humans from images still has limitations, as this research notes: the resulting models often lack the level of detail present in the original input images.

Accurate predictions of these 3D humans require a large amount of context, while precise predictions require high resolution, and these two requirements conflict. The research highlighted above uses machine learning to reconcile them.

The research team formulated a multi-level architecture that is trainable end-to-end. A coarse level observes the whole image at a lower resolution to provide context, and a fine level uses that context to estimate more detailed geometry by observing higher-resolution images. The resulting 3D models are highly detailed and a closer match to the source images.
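The tension between context and resolution can be illustrated with a toy sketch in Python (NumPy only). It is not the research team’s architecture, which uses learned convolutional features to predict 3D geometry; it only shows how two levels can give a query pixel both wide context and fine detail for the same number of input values. The coarse level samples a 5×5 window from a 4× downsampled copy of the image, spanning about 20×20 original pixels, while the fine level samples a 5×5 window at full resolution. The function names, downsampling factor and window size are hypothetical choices.

```python
import numpy as np

def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Average factor x factor blocks (crops any ragged edge)."""
    h, w = img.shape[0] // factor, img.shape[1] // factor
    return img[: h * factor, : w * factor].reshape(h, factor, w, factor).mean(axis=(1, 3))

def window(img: np.ndarray, cy: int, cx: int, r: int) -> np.ndarray:
    """(2r+1) x (2r+1) window centred on (cy, cx), edge-padded at the borders."""
    padded = np.pad(img, r, mode="edge")
    return padded[cy : cy + 2 * r + 1, cx : cx + 2 * r + 1]

def multi_level_features(img: np.ndarray, y: int, x: int, factor: int = 4, r: int = 2) -> np.ndarray:
    """Concatenate coarse (wide context) and fine (full detail) samples for pixel (y, x)."""
    coarse = downsample(img, factor)
    cy = min(y // factor, coarse.shape[0] - 1)
    cx = min(x // factor, coarse.shape[1] - 1)
    context = window(coarse, cy, cx, r).ravel()  # spans about (2r+1)*factor original pixels
    detail = window(img, y, x, r).ravel()        # spans 2r+1 pixels at full resolution
    return np.concatenate([context, detail])

image = np.random.default_rng(0).random((256, 256))
features = multi_level_features(image, y=100, x=37)
print(features.shape)  # (50,)
```

In a real multi-level network, these raw samples would be replaced by feature maps computed at each level, with the fine level receiving the coarse level’s output so that both can be trained end-to-end.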

Machine learning developments like these hold great promise for the future of the VFX industry. If machine learning can take over the hours of manual work spent crafting and perfecting the finer details of 3D humans, artists can focus on the more creative aspects of creating a digital human.

From all the advancements happening with ML and digital humans, it’s clear that they’re here to stay. As machine learning continues to develop, so will the quality of the digital humans that artists can produce; face replacement and advanced 3D human digitization are a testament to that.

Advance your rotoscoping

Rotoscoping is a vital part of an artist's arsenal and an essential part of the digital post-production process, so machine learning's ability to generate mattes automatically is proving to be a lifesaver for many artists. Even since we last dived into the intricacies of machine learning and rotoscoping, this space has continued to move forward.

The main problem with rotoscoping is that it’s time-consuming, manual and largely non-creative. ML rotoscoping tools aim to optimize this process and reduce the time artists have to spend labouring over it.

Foundry’s own R&D project, SmartRoto, is one of the tools aiming to update the rotoscoping process and make it easier for artists. Its goal is to design, develop and demonstrate intelligent tools for high-end rotoscoping of live-action footage that minimize the interaction required from the rotoscoping artist, while keeping a familiar and intuitive user experience.

The research team also wants to investigate sharing machine learning networks and datasets between industrial and research communities. Sharing huge datasets from real-world productions could significantly accelerate machine learning research and development.

SmartRoto and similar rotoscoping tools are set to change the game for rotoscoping and the VFX industry. They allow artists to take a step back and funnel more energy and creativity into other parts of their work, rather than spending hours meticulously rotoscoping.

Looking to the future

With technology advancing rapidly and schedules getting tighter, new challenges keep emerging, and artists often bear the brunt of them. It’s exciting to see what the future holds for VFX, and how machine learning continues to bring new ways of working that boost artists’ creativity and productivity while relieving them of tedious manual tasks.

Stay tuned for Foundry’s latest machine learning advancements, from exciting developments with SmartRoto to new projects we currently have in the pipeline. You won’t want to miss it!
