SmartROTO: enabling rotoscoping with artist-assisted machine learning

As technology advances, machine learning (ML) continues to make waves across industries—not least visual effects, animation and content creation, which each benefit from the time-saving qualities it promises.

It’s Foundry’s firm belief that ML is about accelerating artists: helping them achieve results they couldn’t before and getting to final creative faster by removing drudge work. On this line of thought, ML works best when artist and algorithm work in harmony rather than in contention.

That’s why, back in 2019, we set out on project SmartROTO accompanied by leading global visual effects company DNEG and the University of Bath (UoB). The project aimed to speed up the rotoscoping process—traditionally laborious and time-consuming—via artist-assisted machine learning.

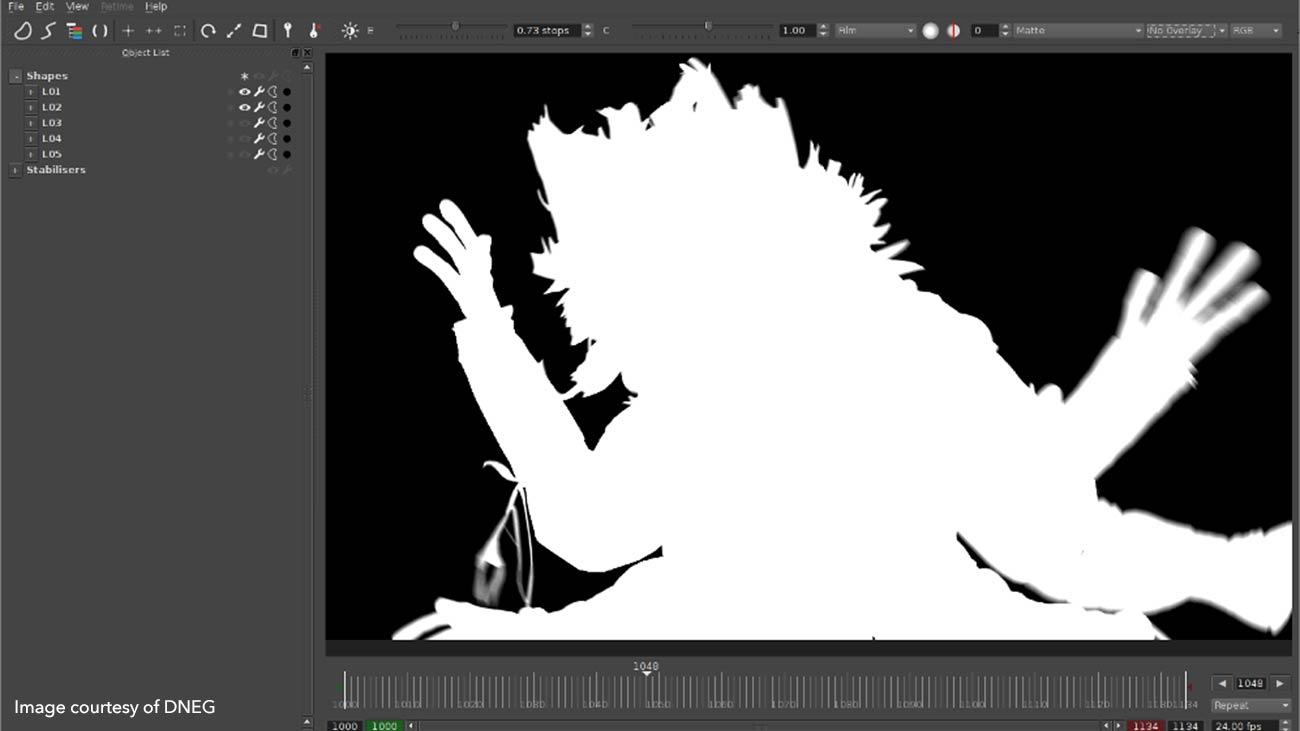

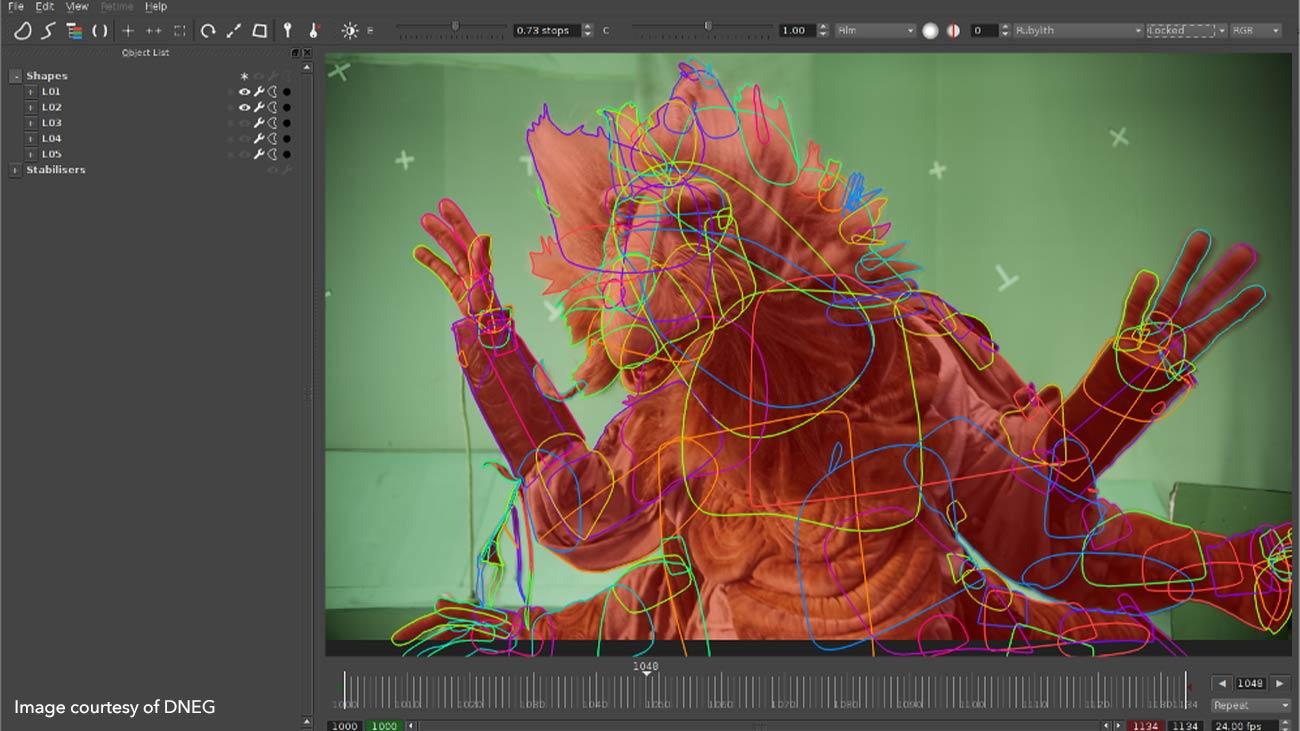

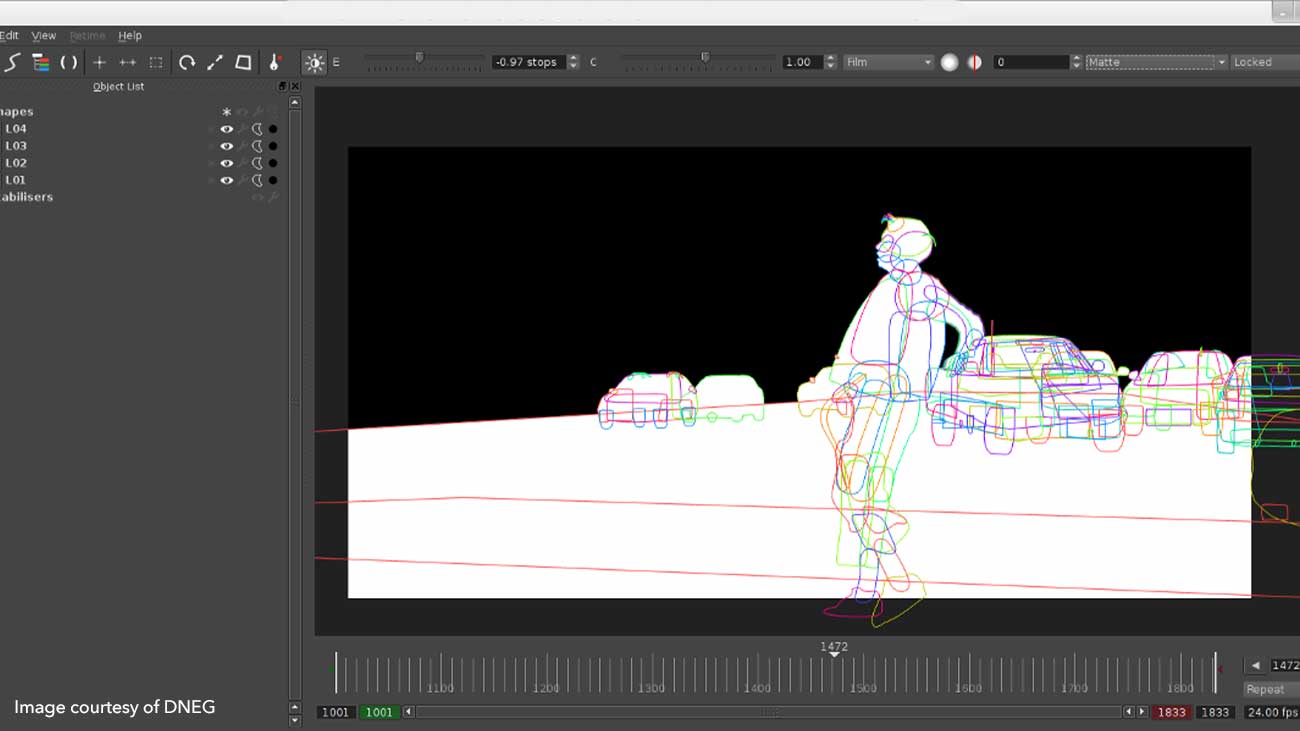

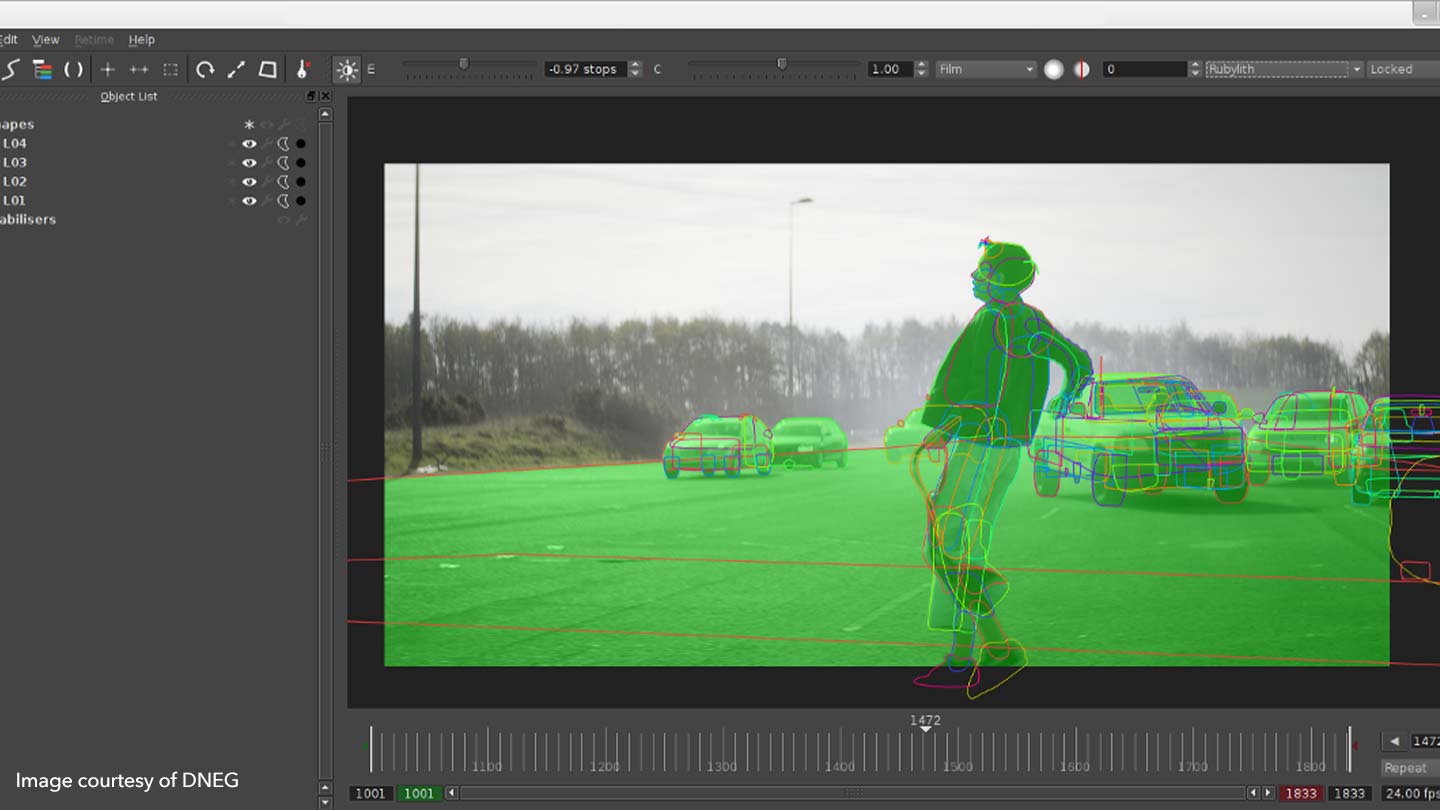

The idea was that artists would create a set of shapes and a small set of keyframes, and SmartROTO—specifically, the ML tech behind it—would speed up the process of setting intermediate keyframes across the sequences.

The project was not without its challenges. Modern machine learning has shifted the focus from a machine-centric approach to a data-centric one, so the success and performance of a tool like SmartROTO depend almost entirely on the quality, diversity and size of its training data set. Deploying ML tools in VFX pipelines, data sharing and artist participation were just a few of the challenges the project set out to navigate and, ultimately, solve.

Two years later, as SmartROTO wraps, what have we learned, where did these challenges leave the project, and what’s in store for the future of ML and rotoscoping? We caught up with Ben Kent, Foundry’s Research Engineering Manager, plus key members of DNEG and the University of Bath to explore all this and more.

Lessons learned

Having worked on the project since its launch in 2019, Ben is a key member of Foundry’s A.I.R (AI Research) team and is perfectly placed to provide insight into SmartROTO’s progress and evolution over the past 24 months.

“It’s been a great learning experience,” he tells us. “The principal learning is that rotoscoping is very hard and that people are going to be involved, certainly for the foreseeable future. There's a lot of considerations in the rotoscoping process that need to be taken into account before starting out on a project like SmartROTO.”

“For example, artists don't necessarily put all the vertices and edges of shapes along corresponding image features and edges; they may just decide to put the middle of the shape elsewhere, resulting in various edge cases that become harder to track. This is compounded by other difficult cases such as rotoscoping motion-blurred objects, and shapes being occluded during parts of their lifetime.”

“Ultimately, this culminated in the realization that if you want to keep in-line with artists, you need to work around how they work. As a result we learnt a lot more about how artists really work.”

Speaking of how this impacted the progress of the project, Ben continues: “The overall approach we've ended up taking is pretty similar to what we planned to do at the beginning. It's still a machine learning-based tracking and shape consistency model; we’re still imagining the user sets up their initial shapes and a few initial key frames. With SmartROTO, we’re then using this model to predict in-between keyframes, better than interpolated or tracked keyframes, to try and reduce the amount of time they spend having to finesse in the middle.”

“Our opinion is that if we could save even 25% of an artist’s time, that would be really valuable, because rotoscoping is such a ubiquitous task in visual effects.”
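For context, the "interpolated" baseline Ben mentions can be sketched in a few lines. This is an illustrative assumption rather than SmartROTO's or Nuke's actual code: the function name `interpolate_shape`, the dictionary layout and the use of plain linear interpolation are all hypothetical, chosen only to show the kind of in-between keyframe that a learned model would be trying to beat.

```python
from bisect import bisect_right

Point = tuple[float, float]


def interpolate_shape(keyframes: dict[int, list[Point]], frame: int) -> list[Point]:
    """Linearly interpolate a shape's control points at `frame`.

    `keyframes` maps a frame number to the artist-set control points for
    that frame (hypothetical layout; every keyframe is assumed to hold the
    same number of points, in the same order).
    """
    frames = sorted(keyframes)

    # Outside the keyed range, hold the nearest keyframe.
    if frame <= frames[0]:
        return list(keyframes[frames[0]])
    if frame >= frames[-1]:
        return list(keyframes[frames[-1]])

    # Find the two keyframes that bracket the requested frame.
    hi = bisect_right(frames, frame)
    f0, f1 = frames[hi - 1], frames[hi]
    t = (frame - f0) / (f1 - f0)

    # Blend each control point between its two keyed positions.
    return [
        (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        for (x0, y0), (x1, y1) in zip(keyframes[f0], keyframes[f1])
    ]


if __name__ == "__main__":
    keys = {
        0: [(0.0, 0.0), (10.0, 0.0)],
        10: [(10.0, 20.0), (20.0, 20.0)],
    }
    print(interpolate_shape(keys, 5))
```

Straight-line blending like this knows nothing about the image, which is why artists end up correcting the frames in the middle. The approach described above replaces that guess with a prediction informed by the footage.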

The importance of teamwork

Both DNEG and UoB had critical roles to play in achieving this aim, and in the overall development of SmartROTO.

As a major VFX studio with a large, dedicated roto team, DNEG was uniquely positioned to provide a truly huge data set of real production roto artwork for the learning algorithm. The data set included over 650,000 artist-animated shapes consisting of 125 million user keyframes. When it came to rotoscoping as an artistic process, the studio brought a wealth of experience and insight into how SmartROTO could provide a superior workflow and better quality of life for the specialist rotoscope artist.

Overseeing DNEG’s involvement was Ted Waine, R&D Supervisor. “By playing a lead role in specifying the feature set and UI/UX of the product, it's been rewarding to help direct the project as well as delivering that all-important data set and the APIs to access it,” he tells us.

Asked what impact a project like SmartROTO could make on the industry, Ted is quick to lend his thoughts. “By producing faster and more intelligent roto tools, we can accelerate the turnaround on delivery of approved roto mattes and hopefully make the process more enjoyable and less laborious for the artists themselves,” he comments. “This would lead to direct cost savings and crucially a positive impact all the way through downstream tasks that depend on roto work.”

Collaboration was core to seeing the SmartROTO project through to fruition, and it created an engaging, holistic research environment for everyone involved.

“Working with the researchers and engineers from University of Bath and Foundry has been inspiring,” Ted comments. “Their comprehension of this problem space and the mathematics at the core of ML techniques is really impressive and watching their approach to developing a working solution has been great.”

His point is echoed by Neill Campbell, Reader in Machine Learning and Visual Computing at UoB: “Working directly with the research teams at Foundry and DNEG is a great experience as it results in real sharing of ideas and understanding—we can work out how to address the relevant and challenging problems that are faced by the industry and bring in new and exciting technologies to solve them, whilst collaborating directly with the end-users to ensure that we present results that make their lives easier.”

UoB focused on two main areas of the project. The first involved contributing to the technical design of the system, including how to exploit the latest machine learning models and work them into existing workflows and pipelines.

The second focus area was an aligned study investigating how to develop machine learning systems that can be trained on datasets whilst respecting privacy and/or IP constraints on the original images.

“For example, we want to train on databases of images to improve a specific task (such as segmentation or tracking objects) without compromising the raw training images—in other words, we cannot share the images from the shots since they may be protected by IP agreements,” Neill comments. “As part of this study we have come up with some proposals for modifications to standard training procedures that would allow machine learning results to be shared externally (e.g. between companies) without compromising the IP restrictions for either company.”

The question of data

UoB’s work in the IP and data sharing space is crucial to the success of a project like SmartROTO. To enable machine learning capabilities in processes like rotoscoping, studios need ready access to huge datasets, since the quality of results is dependent on the amount, diversity and quality of the data the system is trained on. Solving this issue formed a key pillar of SmartROTO.

“One part of the project was about anonymizing data, so studios could potentially share it in a centralized server—they’d train locally and update the weights in a centralized place,” Foundry’s Ben Kent explains. “And though it's extremely hard to fully anonymize the transfer, one potential outcome might be a future where studios are given the tool to train their own networks, along with the engineering to do SmartROTO. It's almost like an empty black box that they then fill in with their own data. Ultimately, in all this, it’s about finding a solution that works for everyone.”
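The "train locally and update the weights in a centralized place" idea resembles what is generally known as federated averaging. The sketch below is an assumption made for illustration, not the scheme the project built: the function name `federated_average`, the flat list of weights and the weighting by sample count are all hypothetical. Note also that sharing weights alone does not guarantee anonymity, which is consistent with Ben's caution above.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Merge locally trained weights into one central set of weights.

    Each entry in `updates` is (weights, num_samples) from one studio:
    the weights it trained on its own private data, and how many training
    samples it used. No images or shapes are sent, only the weights.
    (Hypothetical layout, for illustration only.)
    """
    if not updates:
        raise ValueError("need at least one update")

    total_samples = sum(n for _, n in updates)
    if total_samples <= 0:
        raise ValueError("total sample count must be positive")

    size = len(updates[0][0])
    merged = [0.0] * size

    for weights, n in updates:
        if len(weights) != size:
            raise ValueError("all updates must have the same number of weights")
        # A studio that trained on more data gets proportionally more say.
        for i, w in enumerate(weights):
            merged[i] += w * n / total_samples

    return merged


if __name__ == "__main__":
    studio_a = ([1.0, 2.0], 1)  # trained on 1 sample
    studio_b = ([3.0, 4.0], 3)  # trained on 3 samples
    print(federated_average([studio_a, studio_b]))
```

In a real system the central server would send the merged weights back out and the cycle would repeat over many rounds. The alternative Ben describes, where each studio simply trains its own network, avoids the sharing step altogether.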

Neill Campbell is hopeful that reaching this solution is only a matter of time, a belief bolstered by the work done by UoB: “What’s exciting is that we have a slightly different setup whereby we are concerned with concealing the specific example images used to train an AI to perform a task. Because there are many different ways to perform a task, our goal is to pick one of the approaches that performs well across a wide range of images, not just an approach that only performs well on the ones we were shown as examples.”

“If we achieve this, then we actually meet both goals of concealing the precise training images (you cannot work backwards through the training process) without sacrificing performance,” he explains. “This theory work on privacy is only the tip of the iceberg and has opened up a number of avenues that we are applying for research grants to continue to work on in collaboration with colleagues in Mathematical Sciences. As new results come in, we hope to translate these into practical implementations that we can put to use with industrial partners.”

Alongside the IP and data sharing issue, deploying an ML framework into a VFX pipeline typically throws up its own set of challenges. Traditionally, software release cycles, GPU cost and the lack of interactivity of the ML networks being deployed have made their integration into pipelines tricky.

Yet some headway was made in this regard with SmartROTO, as Ben notes with pride: “With SmartROTO, we’ve got further with the algorithmic side of this and we've really refined what we think it should be.” The result was to pivot to PyTorch—a machine learning framework—which makes it simpler to deploy ML in VFX pipelines, and led to the development of tangible ML features in Foundry tools.

Whilst it’s yet to be integrated with SmartROTO, PyTorch underpins the ML toolset that shipped with Nuke 13.0, made up of several time-saving tools such as the CopyCat and Inference nodes. Both enable artists to harness the power of machine learning to create and apply their own high-quality sequence-specific effects. Read more about how CopyCat can accelerate your post-production workflow in our step-by-step guide.

Looking ahead to a smarter future

With all the above in mind, where does this leave the SmartROTO project as it wraps?

“We need to look at the results to see whether it's worth carrying on with now or sometime in the future,” Ben comments. Yet even if the results are overwhelmingly positive, productization of SmartROTO is still a long way off, he notes: “There's still lots more work to be done—retraining, for example. Automating the rotoscoping process—and rotoscoping itself—is very difficult, and we’re not quite there yet. But we know how important it is for artists, and the time-saving potential it holds, so my hope is that we’ll solve it in the future. Rotoscoping is so ubiquitous in VFX we definitely want to revisit it.”

“The main lesson we’ve learned is that rotoscoping is extremely hard. Machines certainly aren’t replacing artists any time soon. And even though we’ve got something that works with SmartROTO, it’s not currently robust enough for an artist to rely on—and artist experience is paramount for us.”

Guillaume Gales, Senior Engineer and project lead on SmartROTO, echoes Ben’s point: “It’s a challenging problem for which a solution can have a true impact on the VFX community. It would allow artists to spend more time on creativity and less time on tedious work. This makes this project very exciting to work on.”

“SmartROTO will definitely be a valuable tool where conventional approaches fall short,” he continues. “With GPUs always being more powerful, we’ll reach a point where SmartROTO will integrate itself seamlessly with rotoscoping applications like Nuke or Noodle.”

Together, Foundry, UoB and DNEG are excited to continue collaborating in order to take SmartROTO a step further. “Through our research centre CAMERA we will be continuing to work on follow-on machine learning projects with Foundry and DNEG and are looking forward to incorporating our new research into future workflows in established pipelines, but also new areas such as virtual production,” UoB’s Neill Campbell comments.

Ted Waine of DNEG also looks forward to the future: “DNEG's research team, which also has expertise in developing ML algorithms, may be able to continue to refine and iterate on the design. We hope to continue to interact with the other partners to this end and continue development.”

The story behind SmartROTO stands as part of Foundry’s ongoing efforts to make ML accessible to artists by taking complicated algorithms and making them available to solve hard technical problems in an artist-friendly way.

As mentioned above, we’ve integrated ML into Nuke 13 to continue empowering artists by giving them full creative and technical control and accelerating the process of getting to the final pixel-perfect image.

Whilst Foundry’s research teams work hard to make SmartROTO a reality, get stuck into CopyCat with Nuke today and experience the potential of ML-accelerated workflows for yourself.