Bridging the Real & Imagined

The news is out: virtual production, and its promise of faster creative collaboration on-set, is in.

We’ve talked about it ourselves at great length, as have others (it was the hot topic at February’s HPA Tech Retreat), and there’s little wonder why. Virtual production, a set of cutting-edge technologies used in pre-production, enables greater creative freedom on-set and goes some way towards connecting teams and artists working across pre-production and post-production.

It comes at a good time, too. Amidst rising audience demands for high-quality content, delivered at breakneck speeds, studios are being driven to think about how best to optimise their workflows using virtual production techniques. The ultimate aim is to give filmmakers and directors a better view of the final picture, so that they can make more informed decisions on-set, where time matters most.

But where unbridled creativity and blue-sky thinking are the order of the day earlier on in the production pipeline, things become more structured and technical as workflows and pipelines progress towards post-production—yet demands on creativity remain just as high.

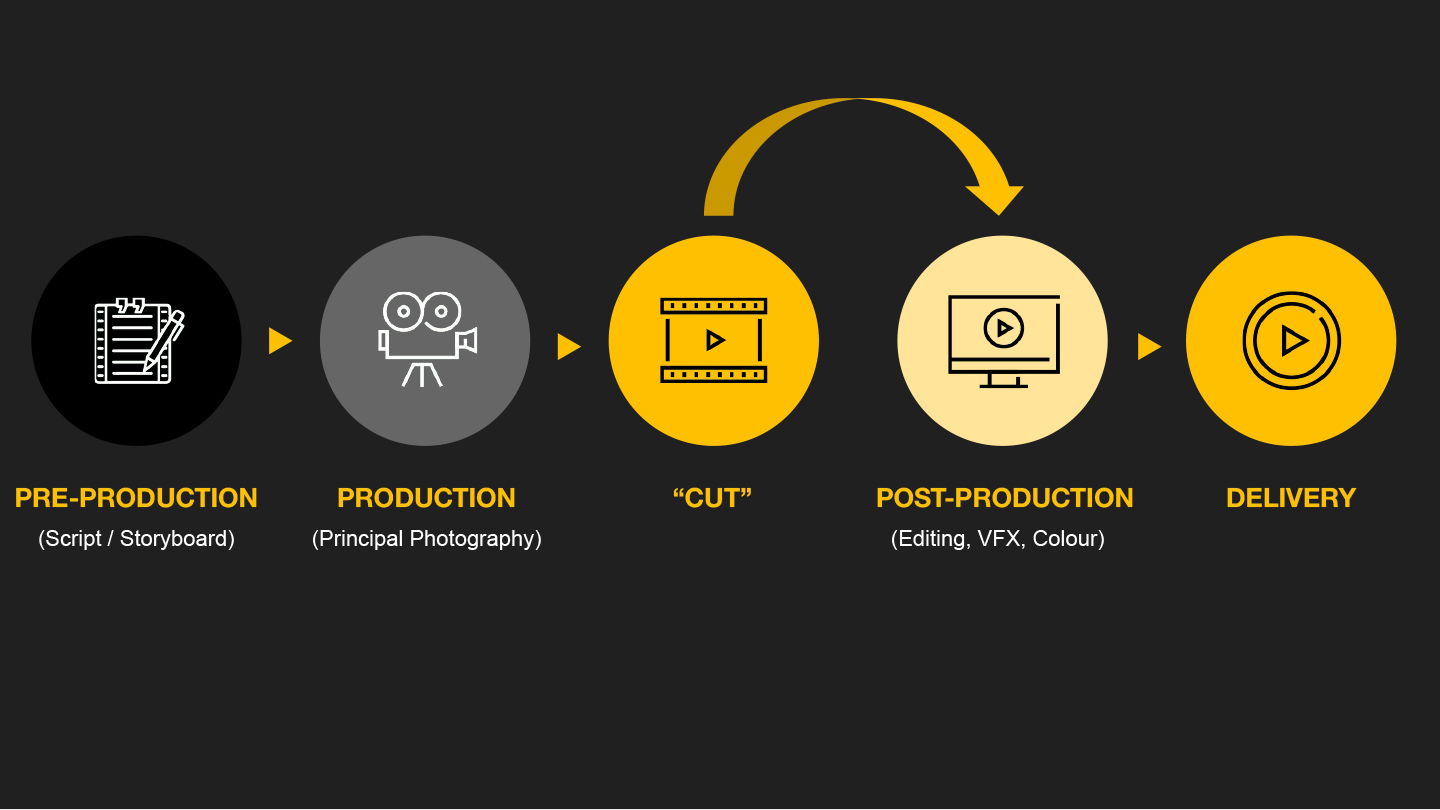

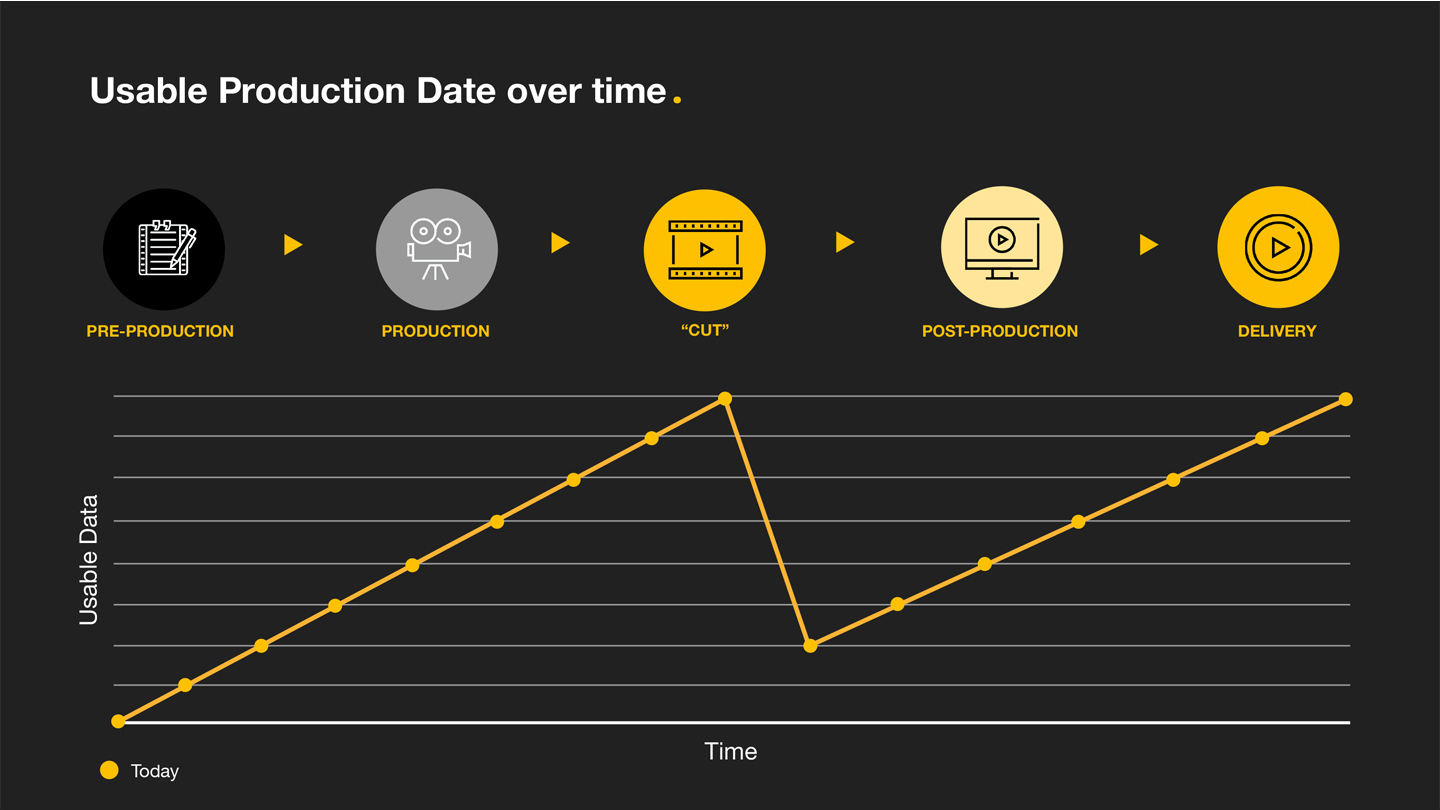

It’s a shift that Dan Ring, Head of Research at Foundry, has studied intently. “From our perspective, we’ve noticed that at some point around the middle, the moment just after principal photography has finished and the director yells CUT, there’s a gap,” he comments. “A distinct piece becomes missing between these two creative worlds.”

The gap emerges, and the resulting issues occur, when decisions are made on-set but the data behind them is discarded. This is often due to a lack of standards and tools to bridge the gap between pioneering virtual production technology and current post-production workflows.

Happily, Foundry have been hard at work investigating and mitigating this issue. “In my role as Head of Research, I look into my crystal ball and try to figure out what workflows of the future can be enabled if we solve some hard problems today,” Dan comments—a sentiment shared across the research team.

Below, we tap into their efforts to explore how creative decisions that flow consistently throughout the entire production chain can be enabled by real-time visualization, standards like USD and Hydra, and cloud technology.

Back to the Future

But first, let’s set the scene. We need to take it back to 2015 with Project Dreamspace—an EU-funded project in which Foundry tested end-to-end virtual production workflows, the results of which have since informed and steered our R&D efforts into this space.

Along with prominent industry partners such as Ncam, Intel’s Visual Computing Institute and FilmAkademie, we set out to develop tools that allow creative professionals to combine live performances, video and computer-generated imagery in real-time, and collaborate to create innovative entertainment and experiences. The idea was that if all the tools speak the same common language, all the way through to post—including on-set visualization—the subsequent consistency of data and decision making would significantly improve the efficiency of the entire project.

“The project was an experiment in connecting disparate technologies around the future of virtual production,” Dan comments. “Things like virtual camera tracking and back-projecting a perspective-adjusted virtual environment onto a screen.

“Our role involved developing a real-time compositing graph, which took in a live SDI input and tracked camera, composed a 3D scene layout from Katana and finally rendered it all in our real-time renderer, all of which is very similar to modern virtual productions.”

Project Dreamspace was a success inasmuch as it brought to light many issues for the future of virtual production. The most fundamental of these was that the technical landscape was very fractured: there was no simple pipeline to facilitate easy virtual production, and the technologies involved did not talk nicely to one another.

Lessons Learned

The results of Project Dreamspace, and the challenges that it uncovered for the future of virtual production, prompted Foundry to start thinking about how and where we could make an impact to solve these.

“We went to some of our customers using virtual production technology and techniques and held a small focus group,” Dan explains. “After general consensus that the landscape is very fractured, we posed the question: ‘Should we build a real-time virtual production tool for use on-set? For example, a real-time version of our flagship product, Nuke, to be used on-set, a tool that everything else could plug into that did live camera tracking, rendering and compositing?’

“The answer was a resounding ‘No. Please don’t do that. But, instead, help us with something else.’”

Dan recalls: “They said, ‘Help us move better and more efficiently from on-set into post. Give us a way to better capture all of the data that’s created on-set and ensure it gets to the right people later on.’”

These sentiments from the focus group reflect a wider challenge: when on-set production wraps and the director yells CUT, the data generated does not usually persist into post-production. Such data often includes concept art, storyboards, layouts, models, preliminary textures, camera tracks, and so on. It is data that would go a long way towards making an artist’s life easier, had it persisted into the later stages of the production pipeline.

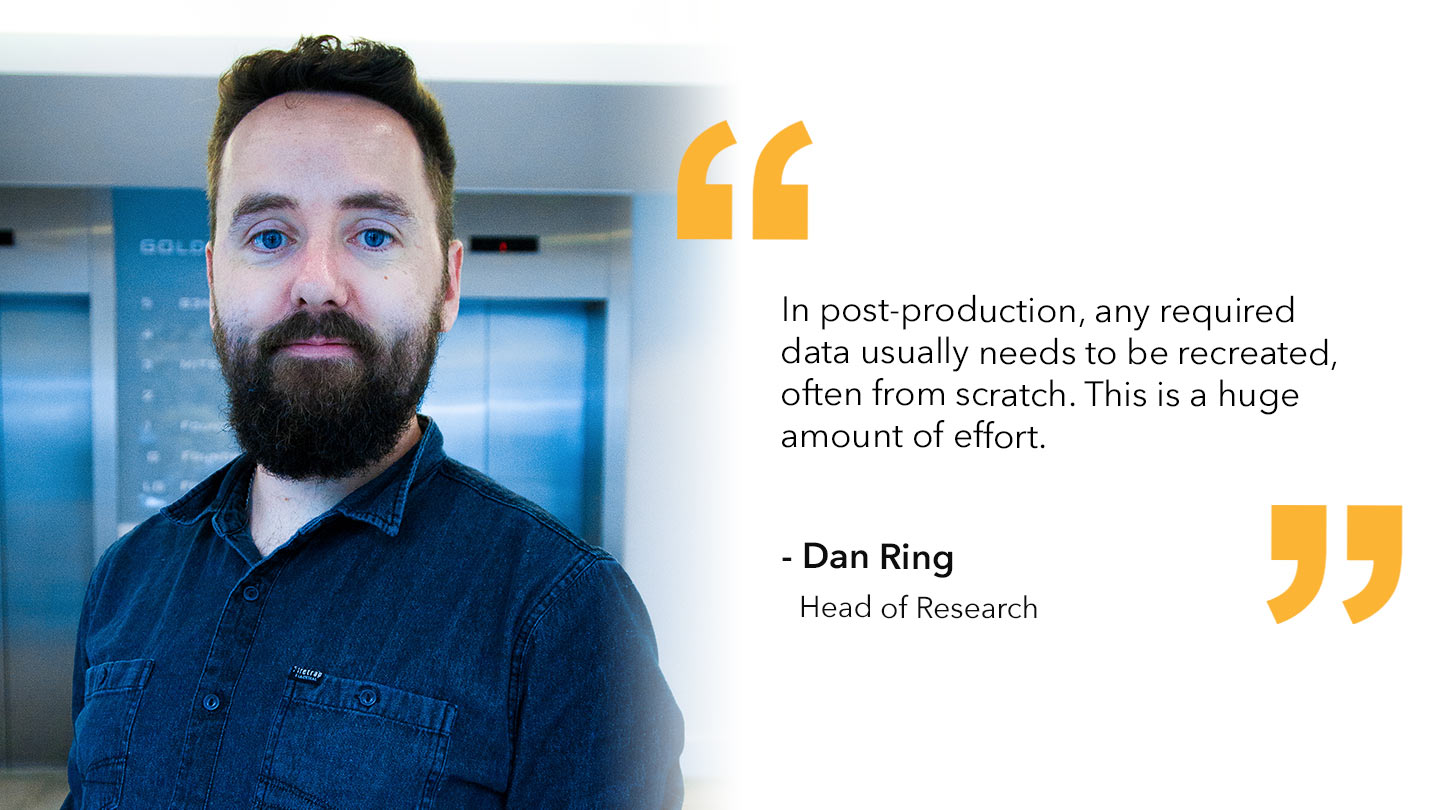

“Instead, in post-production, any required data usually needs to be recreated, often from scratch,” Dan explains. “This is a huge amount of effort. And it’s particularly magnified in virtual productions when so many of the assets have already been created, and the volume of data generated on-set is huge.

“If there was a way of ensuring this data persisted better through the pipeline, you would save a lot of that effort.”

It’s a hard ask, but not insurmountable. The challenge boils down to bridging this gap between real-time, highly creative, data-rich processes like virtual production and the final realisation of the director’s imagination in post-production.

It’s a Magic Number

Foundry’s research team identified three elements that have the potential to successfully bridge this gap.

The first relates to a continuity of decision throughout the production pipeline, facilitated by open standards such as Universal Scene Description (USD). The idea here is that the same USD file is maintained from the start of production—for example, an initial 3D stage in previsualization—and continues along the pipeline as complexity is layered in at each stage, with its assets incrementally improved until they’re ready for use in virtual production. The key is that all important decisions and data persist through the pipeline.
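To make the layering idea concrete, here is a minimal conceptual sketch in plain Python. It deliberately does not use the USD API: the layer names, property paths and values are all invented for illustration, and real USD composition is considerably richer. It only shows the principle that each stage adds stronger opinions on top of earlier ones without discarding them.

```python
from collections import ChainMap

# Hypothetical per-stage "layers": each maps a property path to an opinion.
# All names and values here are illustrative, not real production data.
previs_layer = {
    "/Stage/Set.model": "blockout_v001",
    "/Stage/Camera.focal_length_mm": 35.0,
}
on_set_layer = {
    "/Stage/Camera.focal_length_mm": 50.0,  # lens chosen during the shoot
    "/Stage/Camera.track": "take_07_track",  # data captured on-set
}
post_layer = {
    "/Stage/Set.model": "final_v012",  # asset refined in post
}


def compose(*layers_strongest_first):
    """Resolve each property to the strongest layer holding an opinion on it."""
    return dict(ChainMap(*layers_strongest_first))


# Later stages are stronger, but weaker layers are kept, not overwritten.
resolved = compose(post_layer, on_set_layer, previs_layer)
```

In this toy model the on-set lens choice and camera track survive into the resolved scene alongside the refined asset, and the original previs layer is left untouched for anyone who needs to look back at it.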

The second fundamental element is a consistency of visualization. In a nutshell, once all data is available, measures need to be in place to make sure we’re visualising the same thing in the same way, independent of software package, so that there exists an objective way of visualising an asset. Foundry’s research team sees this being solved by Hydra, a rendering framework which enables the visualization of 3D data, such as USD scene data, using multiple renderers. We’ve already seen its successes in Katana, where it encourages a consistency of visualization across look development and lighting teams, and we’re excited about the potential it harbors beyond this for whole pipelines.

Underpinning both of these is the third and final building block to this bridge...

Timeline of Truth

In essence, this is the idea that once you have a way to persist creative decisions and production data, and to visualise that data objectively, there must be a single reference point for everything. That is, all of these decisions and data must be conformed to a ‘Timeline of Truth’. If Hydra is about visualizing production data at the asset level, the Timeline of Truth is about sequence-level access to all data and decisions.

“We already live in a sequence-based world, and already do this for images and audio,” Dan comments. “Anyone familiar with editing software is able to naturally arrange video and audio clips on a timeline. The idea is that we now do this for all data types.”

Foundry’s inroads into making this a reality have already begun with project HEIST. Our aim as part of HEIST is to provide conformity, so that direction is preserved, communicated and reproducible through to the final pixels. Alongside this we hope to enable a new user base of VFX Editors, Data Wranglers and Digital Imaging Technicians to create workflows that deliver the vision to the VFX artist as intended by the creatives on-set.

Projects like HEIST explore how companies can capture on-set data, and make sure it’s correctly organised and exported to post-production in real-time. But this is only scratching the surface of where we want to go. Amidst increasing calls for the most important data formats generated on-set (lens metadata, virtual camera tracks and so on) to be delivered onto a common timeline, we have pushed the project further.

“The ultimate vision for HEIST is that given any time-stamped data, we want to provide an open way of describing how the data can be interpreted and conformed onto a timeline,” Dan explains.

“For example, 3D positional data such as mocap or camera tracks could be described by USD, while a proprietary lens or camera metadata format might need a wrapper to specify start-times and end-times of a clip, and index into the metadata at a particular time.

“In this way, we don’t need to know about all on-set data or file types. It becomes part of the virtual production ‘glue’ that you would have to create anyway.”
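The wrapper Dan describes could look something like the sketch below. This is not HEIST code or any published format: `MetadataClip`, its fields and the sample lens values are hypothetical, chosen only to show how a start time, an end time and a time-based index can place opaque metadata on a shared timeline without the timeline needing to understand the payload.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class MetadataClip:
    """Hypothetical wrapper that places time-stamped samples on a timeline."""
    name: str
    start: float   # clip start on the shared timeline, in seconds
    end: float     # clip end on the shared timeline, in seconds
    times: list    # sorted sample times, in seconds from the clip start
    values: list   # one opaque payload per sample time

    def sample(self, timeline_time):
        """Return the latest sample at or before the given timeline time."""
        if not (self.start <= timeline_time <= self.end):
            return None  # the clip is not active at this time
        local = timeline_time - self.start
        i = bisect_right(self.times, local) - 1
        return self.values[i] if i >= 0 else None


# Illustrative lens metadata; the payloads are never inspected by the wrapper.
lens = MetadataClip(
    name="lens",
    start=10.0,
    end=12.0,
    times=[0.0, 0.5, 1.0],
    values=[{"focus_m": 2.0}, {"focus_m": 2.4}, {"focus_m": 3.1}],
)
at_10_7 = lens.sample(10.7)   # falls between the 0.5 s and 1.0 s samples
before_clip = lens.sample(9.0)
```

The design choice worth noting is that the wrapper only needs sample times and clip bounds; the payload itself can stay in whatever proprietary form it arrived in.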

And now for the cherry on the cake: “When the director says ‘Cut’, all time-stamped data for that shot is wrangled onto the same timeline, and becomes the single source of truth.

“Not only that, but because we know how to operate on all data, it can be edited, time-slipped, stretched, and viewed, just like you would image or audio data.

“And finally, when it comes to sharing the data with other people, studios or vendors to be worked on, it can be easily exported, with the confidence that all available data is being shared in an open and accessible way.”
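As a rough illustration of what editing non-image data on a timeline might involve, the sketch below treats slipping and stretching as changes to a simple time mapping. `TimedTrack` and its methods are hypothetical names, and "slip" is simplified here to mean shifting the whole track along the timeline; a real editorial tool would distinguish more operations than this.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TimedTrack:
    """Hypothetical time-stamped track conformed to a shot timeline."""
    name: str
    duration: float      # length of the source data, in seconds
    offset: float = 0.0  # where the track starts on the timeline, in seconds
    scale: float = 1.0   # timeline seconds per source second

    def slip(self, seconds):
        """Return a copy shifted along the timeline by the given amount."""
        return replace(self, offset=self.offset + seconds)

    def stretch(self, factor):
        """Return a copy played back slower (factor > 1) or faster (< 1)."""
        return replace(self, scale=self.scale * factor)

    def to_source_time(self, timeline_time):
        """Map a timeline time to source time, or None outside the track."""
        t = (timeline_time - self.offset) / self.scale
        return t if 0.0 <= t <= self.duration else None


camera = TimedTrack(name="camera_track", duration=4.0)
slipped = camera.slip(1.0)         # starts one second later on the timeline
stretched = slipped.stretch(2.0)   # and now plays at half speed
```

Because each operation returns a new track rather than mutating the original, the data as captured on-set stays intact while edits are layered on top of it.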

Strength in Numbers

As technology advances and we see a greater variety and volume of data, Foundry is more committed than ever to building bridges between creative, real-time workflows and modern visual effects and animation pipelines in the interest of keeping both as stable and open as possible.

But importantly, as Dan points out, “We need to all be speaking the same language before we can have real-time conversations.”

He continues: “In a post-Covid-19 world—where, ironically, we may be more connected than ever—everyone is wondering what that new world will look like. Distributed and remote work are likely going to be written into everyone’s disaster mitigation plans, and that means things have to change. Pipelines will need to work around people, and the key to that is adhering to open formats and standards.

“Similarly, there will likely be a push to using cloud to achieve real-time workflows such as remote or distributed rendering, which of course relies on a stable pipeline.”

What has become abundantly clear for Dan and Foundry’s research team is that the visual effects industry is all in this together. “This is too great a problem for any one company to solve,” Dan comments. “We need to work together to remove silos. It means we need to actively work with bodies solving shared problems—whether that be the ASWF, or the VFX Reference Platform.”

Foundry is working hard to do our part—whether that be with our partners on projects like HEIST, or by incorporating real-time workflows into our products—to make open, stable pipelines of the future a reality.

Watch this space.
