Top three ways Near Real-Time is advancing workflows

Technology is an ever-changing giant. Just when you think you’ve got the hang of the latest advancement, something new comes along.

Not that we’re complaining. These advancements bring with them better and more efficient ways of work which, in a time of increasing quality demands and tightening deadlines, are a necessity.

Spearheading many of these improvements are virtual production and real-time. Both are being used in more high-profile productions, like The Mandalorian, and their capabilities extend further each year.

So, it’s not surprising that one of the latest tech advancements, Near Real-Time (NRT), was born out of these two areas.

NRT describes the process of starting post-production live during shooting. Using camera metadata and lens information, filmmakers are able to start visual effects (VFX) work almost immediately after the director has said ‘Cut!’.

The term was coined during the production of Comandante, a film that follows the true story of an Italian submarine commander in World War II. It was introduced to meet the high aspirations of the film’s director, who wanted control over how the final shots would look without having to wait until post to see the final VFX.

The technique is set to change the way we work with real-time, giving filmmakers more confidence in their takes and enabling them to push the limitations of virtual production further than ever before. But what are the nuances of this emerging technique, and how is it set to improve our workflows?

Here are the top three ways Near Real-Time is changing the way we work.

Visualizing shots better

Creating high-quality visuals is at the top of every filmmaker's list. They want their work to look as visually beautiful as possible, no matter the style or genre. Comandante was no different. The director wanted to be able to see his work and, if means allowed, would have used completely practical effects and a real-life submarine in order to get the full effect of the film. But there were several limitations with this.

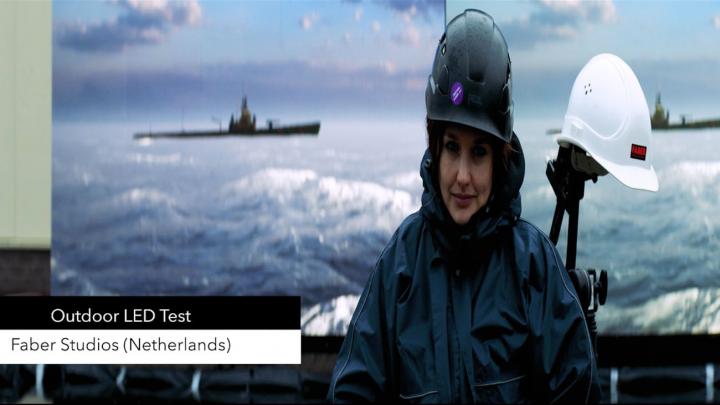

To start, it was physically impractical to take everyone to the middle of the ocean to capture the film. Blue or green screens also couldn’t be used as the reflections needed to appear correct when filming and the screen didn’t allow for these to be captured. High-resolution LED screens were also ruled out as they couldn’t be used with large water tanks and wouldn’t pull in all the elements needed in real-time.

Working with the team at Foundry, alongside the Irish studio Hi-Res, Cooke Optics, and 80six, the production settled on low-res LED screens. These would not only provide the light and reflections needed to make the shots realistic but could also be used for the real-time elements. The lower-resolution LED screens also had a major effect on the budget, as they’re less expensive than their higher-res counterparts. It is an approach we’re likely to see more of in future productions, as filmmakers can build large volumes from lower-res panels without spending a fortune.

But how do lower-resolution LED screens help with the quality and visualization of shots?

During filming, all the camera metadata and lens information was recorded from the camera and streamed into Unreal. This data was then fed into Nuke, Foundry’s compositing tool. From there, the team could effectively begin post on set, using the UnrealReader node and CopyCat to render and then start compositing.

The UnrealReader node enabled the filmmakers to re-render the background in high quality, obviating the need for the aforementioned higher-resolution LED walls. Once they had the high-res shots, they merged the virtual and physical elements using Nuke’s CopyCat tool, which uses machine learning to separate the actors from the LED wall. In the end, the director was able to decide which shots worked and which didn’t. Seeing the shot with all the VFX elements in place let the director clearly visualize how the final product was going to look. From there, the production team could reshoot and adjust if necessary, or move on to the next setup based on what they had seen.

Making better use of metadata

Metadata is very important in modern cinema workflows but is often lost during the production process. Many filmmakers underestimate its importance. Lens metadata in particular, which modern lenses can provide, saves time and, when stored correctly, also saves money and makes it easier to reverse-engineer the optics of a scene for the visual effects work.

Accurate lens metadata and lens maps give filmmakers a head start on finishing their VFX by providing the distortion parameters of a lens. This is why metadata was a key component of the filming of Comandante.
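To illustrate why distortion parameters matter for VFX, here is a minimal sketch of how a compositing step might remove and reapply lens distortion. It assumes a simplified two-coefficient radial model; the function names and the coefficients `k1` and `k2` are hypothetical and are not taken from Cooke’s lens data or from Nuke, and real anamorphic lenses need a richer model than this.

```python
def distort(x, y, k1, k2):
    """Apply a simplified radial distortion to normalized image coordinates.

    (x, y) are measured from the image center. k1 and k2 are illustrative
    coefficients, not values from any real lens.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert distort() by fixed-point iteration.

    Starts from the distorted point and repeatedly divides by the
    distortion scale evaluated at the current estimate. This converges
    for mild distortion, which is the assumption made here.
    """
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    k1, k2 = -0.1, 0.01          # hypothetical coefficients
    xd, yd = distort(0.5, 0.3, k1, k2)
    print(undistort(xd, yd, k1, k2))
```

With measured parameters, CG elements can be rendered undistorted and then distorted to match the plate. Without them, the parameters have to be estimated from the footage, which is the reverse-engineering step that good metadata avoids.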

Cooke, a lens manufacturing company, has instituted a program of empirically measuring every lens that they manufacture on a special machine that pixel-accurately quantifies every ray in the lens. A Cooke lens was used to film Comandante, specifically an anamorphic 40mm lens, and it made a huge difference as the team was able to put the anamorphic comps together in a reasonable period of time. This, alongside the implementation of Unreal and Nuke, ensured that all the data that would otherwise be lost was recorded and used on set.

When we talk about metadata, it’s important to also take into account some of the challenges that still surround it. Metadata has to be tracked and kept in a way that’s transferable across the whole pipeline, not just through NRT but down to post. There isn’t a standard way that metadata is recorded in the industry, and therefore, it often gets lost along the way. What Near Real-Time does is ensure that all the metadata needed for a shoot is tracked and stored efficiently, because that information is crucial for bringing in the VFX elements and showing the director the work in real time.
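As a rough sketch of what “transferable across the whole pipeline” could mean in practice, the example below stores one metadata record per frame, keyed by timecode, and round-trips it through JSON. The `FrameMetadata` class, its field names, and the sidecar layout are all hypothetical. They do not represent an industry standard or the format used on Comandante.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class FrameMetadata:
    """One frame's camera and lens data (hypothetical field set)."""
    timecode: str
    focal_length_mm: float
    focus_distance_m: float
    t_stop: float
    camera_position: tuple  # (x, y, z) in meters


def to_sidecar(frames):
    """Serialize frame records to a JSON string keyed by timecode."""
    return json.dumps({f.timecode: asdict(f) for f in frames}, indent=2)


def from_sidecar(text):
    """Rebuild frame records from the JSON produced by to_sidecar()."""
    records = {}
    for timecode, fields in json.loads(text).items():
        # JSON has no tuple type, so restore the position as a tuple.
        fields["camera_position"] = tuple(fields["camera_position"])
        records[timecode] = FrameMetadata(**fields)
    return records


if __name__ == "__main__":
    frames = [
        FrameMetadata("01:00:00:01", 40.0, 2.5, 2.3, (0.0, 1.6, -3.0)),
        FrameMetadata("01:00:00:02", 40.0, 2.6, 2.3, (0.0, 1.6, -2.9)),
    ]
    restored = from_sidecar(to_sidecar(frames))
    print(restored["01:00:00:02"].focus_distance_m)
```

Keying records by timecode is one simple way to let any later stage look up the lens state for a given frame, whichever tool wrote the file.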

The future does, however, look bright for lens metadata with companies like Cooke pushing for better lens metadata and NRT taking full advantage of its capabilities.

Non-linear production

The reshaping of production pipelines isn't a new thing, especially where virtual production and real-time are concerned. Slowly, pipelines have been straying from the traditional and moving towards a more circular and collaborative way of work. So, it is no surprise that Near Real-Time also plays a role in this shift.

With NRT, comp happens on set almost immediately after a shot has been recorded. It is no longer relegated to being the last stage in the pipeline; instead, it happens as the project is being filmed. Post can start the second you begin principal photography if you want it to, and can inform the way you shoot something. Filmmakers are able to see the whole picture rather than waiting until post-production to know how their film will look.

It doesn’t have to stop there either. An in-camera comp is not your ultimate goal. It is the middle point of the process, just like it always has been, and you can go on in post as long as you need to. This means you can now finish some shots on the day of shooting, some in a couple of days and others by the end of the director’s cut weeks later.

This new circular way of working also doesn’t have to change the length of the production process. It can still take the ‘traditional’ length of time because post effectively began at the start of shooting and, by the time you’ve finished filming, you almost have the director’s cut because of the work that has been done on set in real time.

Taking Near Real-Time to the future

Near Real-Time gives filmmakers a flexible and interactive way of working and allows directors to make instant and informed decisions whilst shooting. While NRT is still a new way of working and very much in the early stages of development, we’re excited to see how the technique will be used in the future.

With the continuous development of workflows supported by new technologies, more advancements like Near Real-Time will progressively alter and improve the way we work, ultimately paving the way for more efficient production pipelines.