Machine Learning and Nuke 13.1: what’s next?

Earlier this year, Nuke 13.0 introduced a new machine learning (ML) toolset, including CopyCat and the Inference node. Since then, both have been widely used and experimented with across the visual effects (VFX) industry.

But this was just the start.

Now, with the release of Nuke 13.1, we're excited to bring new and updated ML features to the compositing tool, so artists can continue to harness the power of machine learning inside Nuke.

So, what’s new?

When improving the machine learning toolset, the Nuke and Research teams knew it was important to give artists more flexibility and compatibility when using the ML tools. That's why Nuke 13.1 adds support for third-party PyTorch models in the Inference node.

PyTorch is one of the leading machine learning frameworks and a key part of many ML pipelines. Supporting it lets artists load custom and third-party models into Nuke, which in turn opens Nuke up to a vast range of community-generated machine learning models.

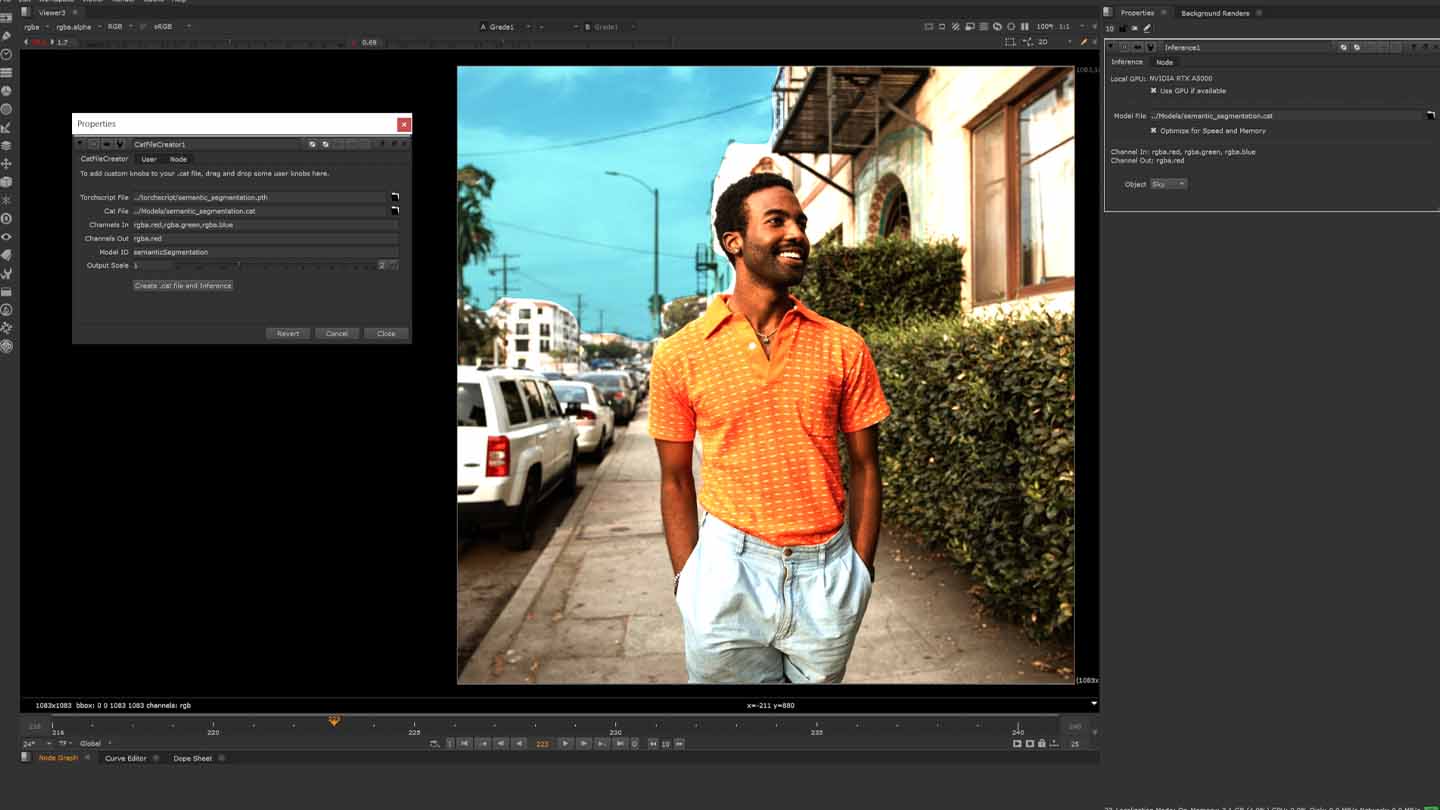

Using the new CatFileCreator node, PyTorch models can be converted into .cat files (the same format that CopyCat outputs) so that they can be loaded into Nuke. This simplifies the whole process, because artists no longer have to leave Nuke to run their models. CopyCat has improved too: it now produces better results with fewer training steps, especially on higher-resolution images.

While the upgrades coming to the ML toolset are exciting, what exactly do they mean for you and your workflow?

Removing pipeline friction

At Foundry, we want Nuke to empower artists to do more with the tools they have, so they can excel and create pixel-perfect content, and these upgrades to Nuke's ML tools are part of that. Nuke 13.1 not only brings a more pipeline-friendly toolset but also removes friction that artists may have previously faced in their workflows.

The new support for third-party PyTorch models means that artists are no longer limited to networks they have trained themselves with CopyCat. They can now use most image-to-image PyTorch networks natively in Nuke, something both studios and artists have frequently requested. As Ben Kent, Research Engineering Manager, tells us:

“We know that many R&D teams are coming up with great ML networks that they want to share with other teams and artists; these updates allow them to do that. Before, people would've had to set up a Python environment, render their footage out of Nuke, process it and then re-import it into Nuke. With the updates to Inference, it all happens inside Nuke and makes it more pipeline-friendly.”

Nuke 13.1 also opens Nuke up to a wider range of machine learning networks. GitHub is a popular place for academic researchers to publish new work, and artists can now bring those networks straight into Nuke, making it a more open platform.

“It [the updates to Inference] opens up Nuke to the world of machine learning tools [...] At Foundry, we don't want to be the guardians or gatekeepers for machine learning. We're going to keep working on our own tools, and make sure we’re not limiting what artists can do with them.”

Looking to the future

Although Nuke 13.1 has only just been released, the Nuke and Research teams are already planning the next round of improvements to Nuke's ML toolset, and they are always keen to hear feedback from artists and studios. We want to keep artists at the heart of machine learning: making it more accessible, removing limitations, and ensuring that artists have complete control over their workflows.

All in all, Nuke 13.1 continues to open Nuke up to the world of machine learning tools and ensures artists feel empowered to take advantage of these developments, accelerating their work and extending what's possible in their pipelines.