Volumetric video capture: challenges, opportunities and outlook for production pipelines

Volumetric imaging is crucial to immersive experiences, providing full 3D capture that is synonymous with virtual reality (VR) headsets such as the upcoming Oculus Quest 2.

As VR’s usage steadily grows across industries (not just in media and entertainment, but also in sectors like architectural and product visualization), volumetric technology needs to grow alongside it to facilitate the next generation of high-quality immersive content.

Yet, whilst the potential of volumetric video capture is vast, its underlying technology remains a niche avenue of exploration, accessible only to a select number of companies and businesses.

High costs, coupled with technical barriers, mean that volumetric research is primarily reserved for juggernauts with big teams and deep pockets that can absorb the capital cost. And when it comes to the practical application of volumetric, it’s not unheard of for these same companies to charge thousands per minute of production.

Ultimately, this makes it much more difficult for smaller businesses and set-ups to explore and experiment with volumetric video capture, in hopes of unlocking the full potential of this pioneering technology.

VoluMagic, Foundry’s collaborative, 18-month industrial research project, was born to address this issue. It aimed to re-conceptualize the volume reconstruction and editing process as an image editing one, in doing so offsetting the barriers mentioned above by making volumetric more accessible.

In this article, we explore the nuances of volumetric as a trend, how Foundry’s recently-wrapped VoluMagic project developed in response to and alongside it, and what’s on the horizon for this exciting technology.

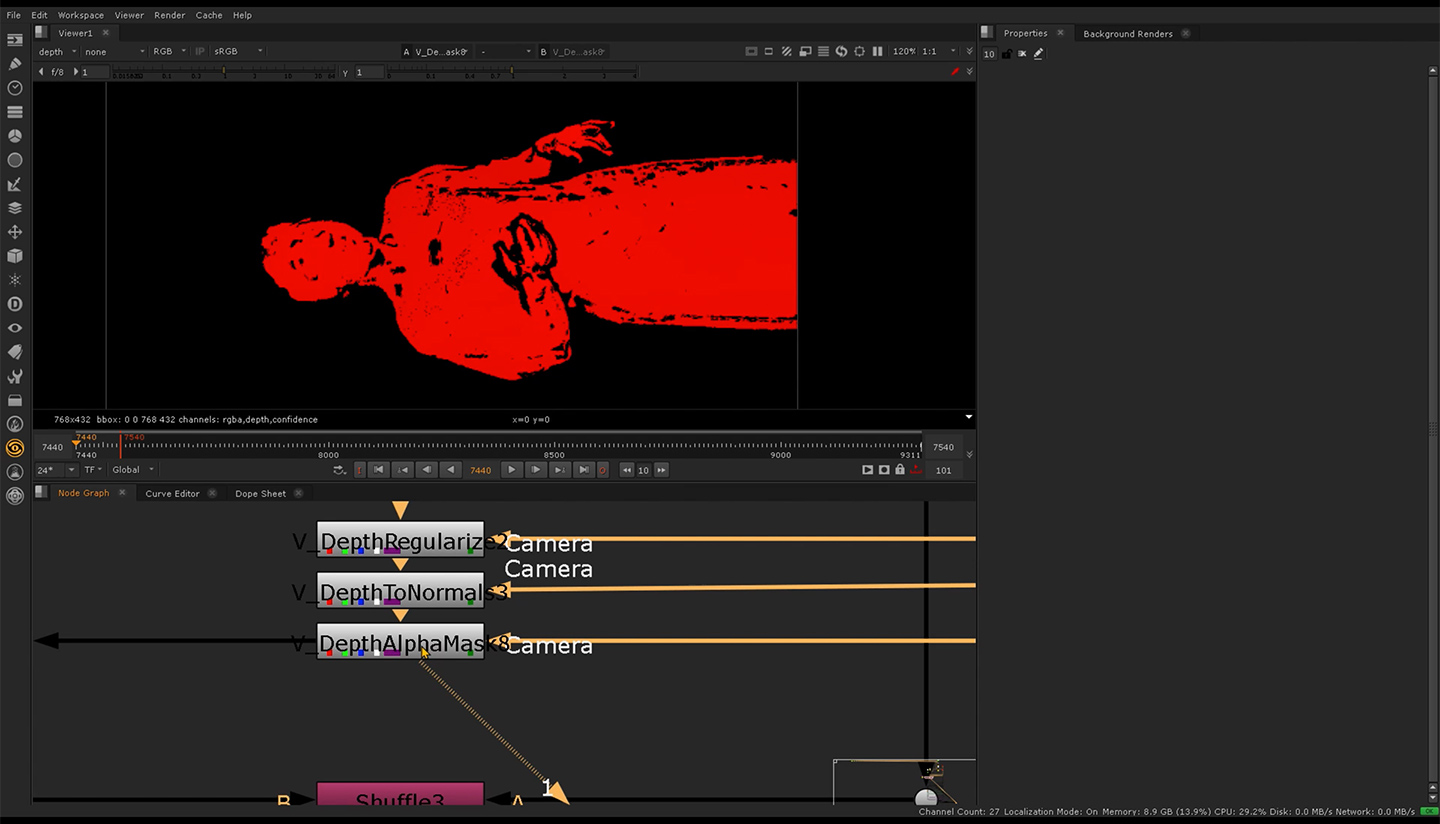

VoluMagic in Nuke

More than a movement

General appetite for volumetric capture technology remains high as 2020 wraps up and we head into a new year. According to a recent report, the volumetric video market is expected to grow from $578.3 million in 2018 to $2.78 billion by 2023, in line with the burgeoning tech that facilitates it.
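To put that forecast in perspective, the two quoted figures imply a compound annual growth rate of roughly 37%. The short sketch below shows the arithmetic; it uses only the numbers quoted above, assumes a five-year span from 2018 to 2023, and the function name is purely illustrative. The report itself may state a slightly different rate depending on how it defines the period.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in millions of US dollars.
start_2018 = 578.3
end_2023 = 2780.0

# Assumption: growth compounds once a year over 2018-2023 (five periods).
rate = implied_cagr(start_2018, end_2023, years=2023 - 2018)
print(f"Implied annual growth: {rate:.1%}")
```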

In a nutshell, volumetric video is the process of capturing moving images of the real world—namely, people and objects—that can be later viewed from any angle at any moment in time, to create compelling VR content. Paired with today’s powerful VR headsets, volumetric video transports audiences to entirely new realities, in which they hold complete autonomy over which shots and angles they view.

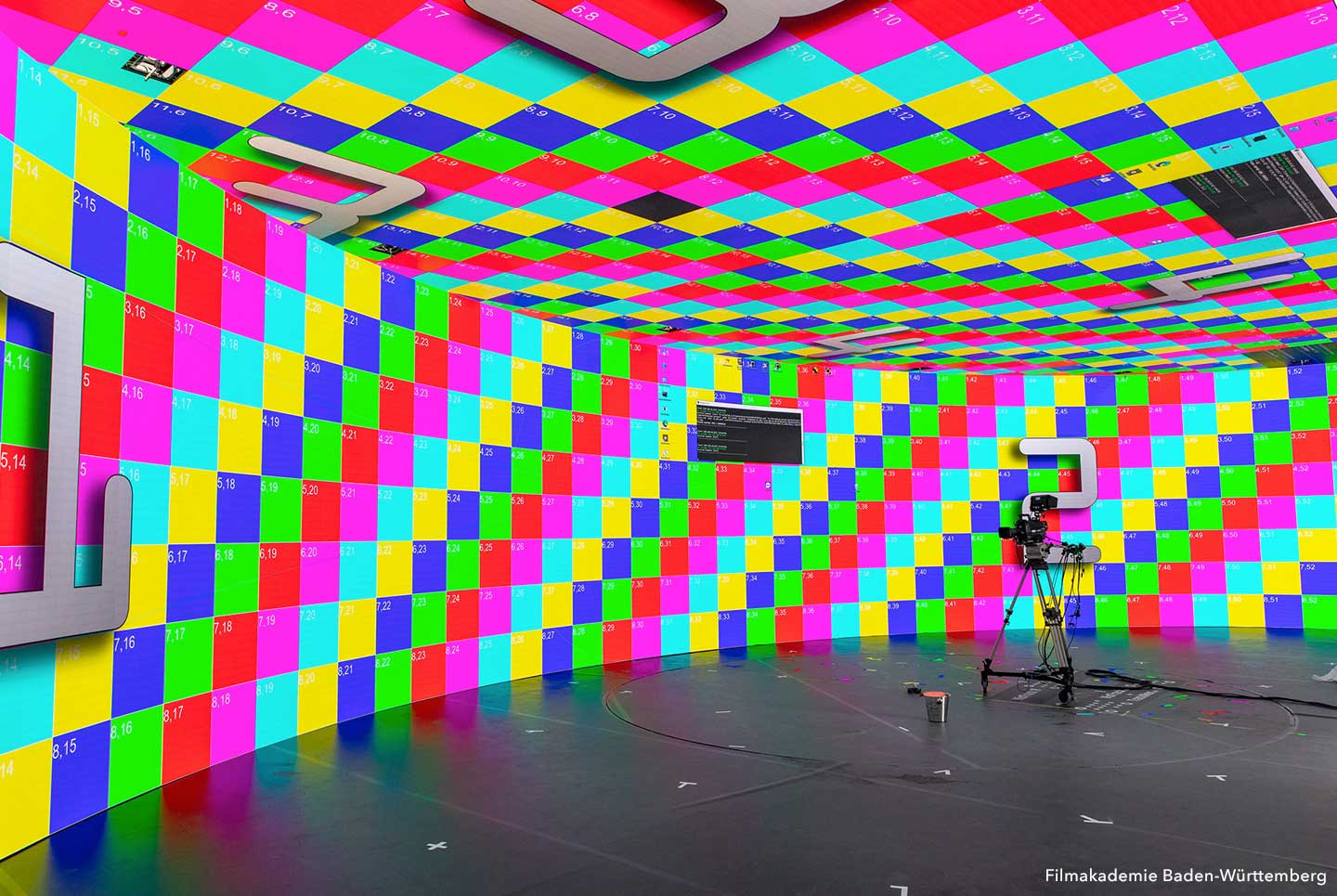

‘True’ volumetric capture involves using multiple witness cameras and achieving a volumetric reconstruction through these, without the use of a principal camera. Live performances are conducted in a large enough space and then shot from multiple angles, capturing all dimensions of the scene so that this can be replicated as a 3D experience.
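One classic way to turn several camera views into a volume is shape-from-silhouette, often called the visual hull: a voxel is kept only if it projects inside the subject’s outline in every view. The sketch below is a toy illustration of that idea and not a description of any particular studio’s or Foundry’s pipeline. It assumes three idealised orthographic cameras looking straight down the grid axes and a synthetic sphere as the subject; the function and variable names are hypothetical.

```python
import numpy as np


def carve_visual_hull(silhouettes: dict[int, np.ndarray], size: int) -> np.ndarray:
    """Keep only voxels whose projection falls inside every silhouette.

    `silhouettes` maps a viewing axis (0, 1 or 2) to a boolean size x size
    mask, as seen by a hypothetical orthographic camera looking along it.
    """
    occupied = np.ones((size, size, size), dtype=bool)
    for axis, mask in silhouettes.items():
        # Re-inserting the viewing axis lets broadcasting sweep the 2D mask
        # through the volume, i.e. project every voxel onto that image.
        occupied &= np.expand_dims(mask, axis=axis)
    return occupied


# Synthetic subject: a solid sphere centred in a 32^3 voxel grid.
size = 32
coords = np.indices((size, size, size)) - (size - 1) / 2
sphere = (coords ** 2).sum(axis=0) <= (size / 4) ** 2

# Each "camera" sees the subject's outline along one axis.
silhouettes = {axis: sphere.any(axis=axis) for axis in range(3)}

hull = carve_visual_hull(silhouettes, size)
print(f"subject voxels: {sphere.sum()}, hull voxels: {hull.sum()}")
```

The carved hull always contains the true subject but is never smaller than it, which is one reason real capture stages use many more cameras: each extra viewpoint carves away more of the excess.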

Early pioneers of a form of volumetric capture include the team behind 2007’s Beowulf. Offered widely to theatres in 3D stereoscopic vision, the performances of the film are built on complex motion capture. This takes place on a huge 7.5m x 7.5m x 4.3m stage, dubbed ‘The Volume’, in a complete divergence from the usual ‘cut, action’ takes of traditional film.

Essentially, the entire performance is virtually filmed from every angle, without a principal camera, before being manipulated in post-production by the director, who can position the camera to capture the actual performances from any available angle.

The benefits of this are immediately tangible. Actors are not hindered by reloads, cuts, and camera or lighting set-ups. Longer, multi-actor takes are the result, lauded by John Malkovich as resembling theater performances much more than typical film shoots, giving actors of his calibre free rein to do what they do best.

From a practical standpoint, having multiple witness cameras to assist on-set results in needing fewer people to manage filming, which can offset the problem of rising production costs. What’s more, the combination of witness cameras and drones has the added advantage of capturing more data than just relying on a principal camera—data which can be shared across stages of production to tie them closer together.

Modern volumetric video capture builds on the above concept, and is proving to be the next logical extension of the MoCap volume used on Beowulf, capturing performances in higher fidelity.

Barriers to success

On paper, it’s easy to see why volumetric capture was tipped as the ‘next big thing’ for the media and entertainment industries, and beyond. Tech giants such as Intel Studios lent their name to the technology, building a 10,000-square-foot, four-story capture stage, the biggest volumetric capture facility in the world.

Put into practice, however, the expense and highly technical nature of volumetric capture make its application and usage by the ‘everyman’ more of a pipe dream than a reality.

It’s for this reason that there’s been a shift in focus for studios wishing to experiment with volumetric capture, to more en vogue methods of virtual production.

LED walls, such as those used on The Mandalorian and Westworld, are proving a popular alternative. Actors can be captured with a multi-camera set-up plus a principal camera in front of this LED wall, rather than a green screen, allowing for full 3D performance scanning that resonates more with traditional production methods. Nonetheless, LED walls remain a costly alternative, again reserved for big-budget productions.

A novel approach

Enter VoluMagic: Foundry’s recently wrapped, government-funded research project, which kicked off in 2018 in collaboration with content creators Happy Finish. Together, Foundry and Happy Finish aimed to develop new, accessible tools to progress the current state of live-action immersive content.

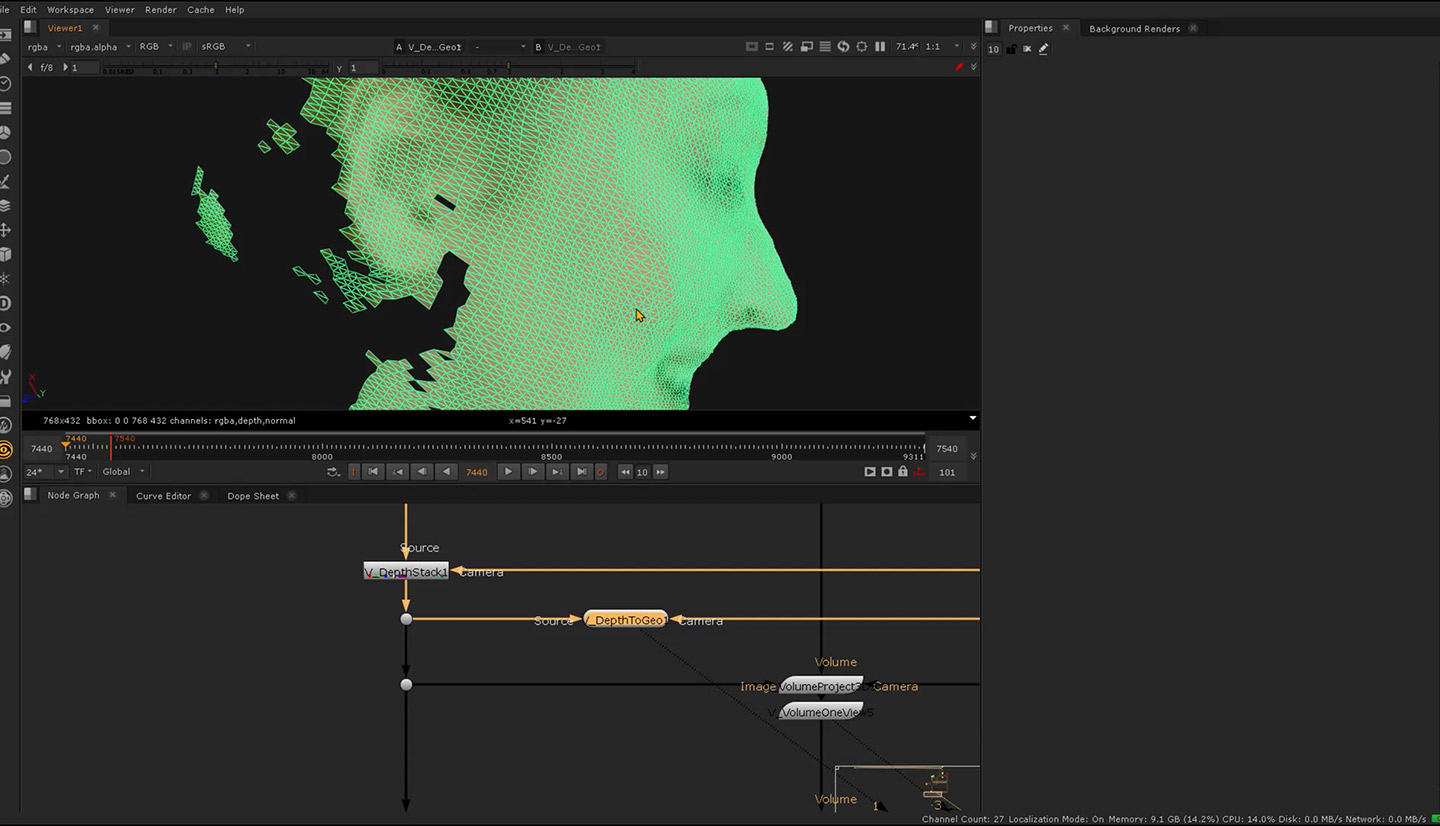

The vision of VoluMagic is to enable content creators to manipulate volumetric datasets in an environment that they’re already familiar with—compositing tool Nuke. By providing the components and building blocks to allow for the assembly of a volumetric system within Nuke, what was previously a niche technology can now be explored and utilised by a much wider base. Compositors are given the means of potentially solving post-production challenges with multi-view camera set-ups.

We previously explored the VoluMagic project extensively in its early stages, as things were gathering steam. But what’s happened since then—and as the project wraps, what key learnings have the team come away with?

Daniele Bernabei, Foundry’s Principal Research Engineer, lends his thoughts: “The main takeaway was about volumetric in particular,” he comments. “We were hoping to try and find a way to make volumetric editing in Nuke something easy to do for compositors. But when we started talking to people, we realised that this wasn’t something that fully fits the way they’re used to working in Nuke.”

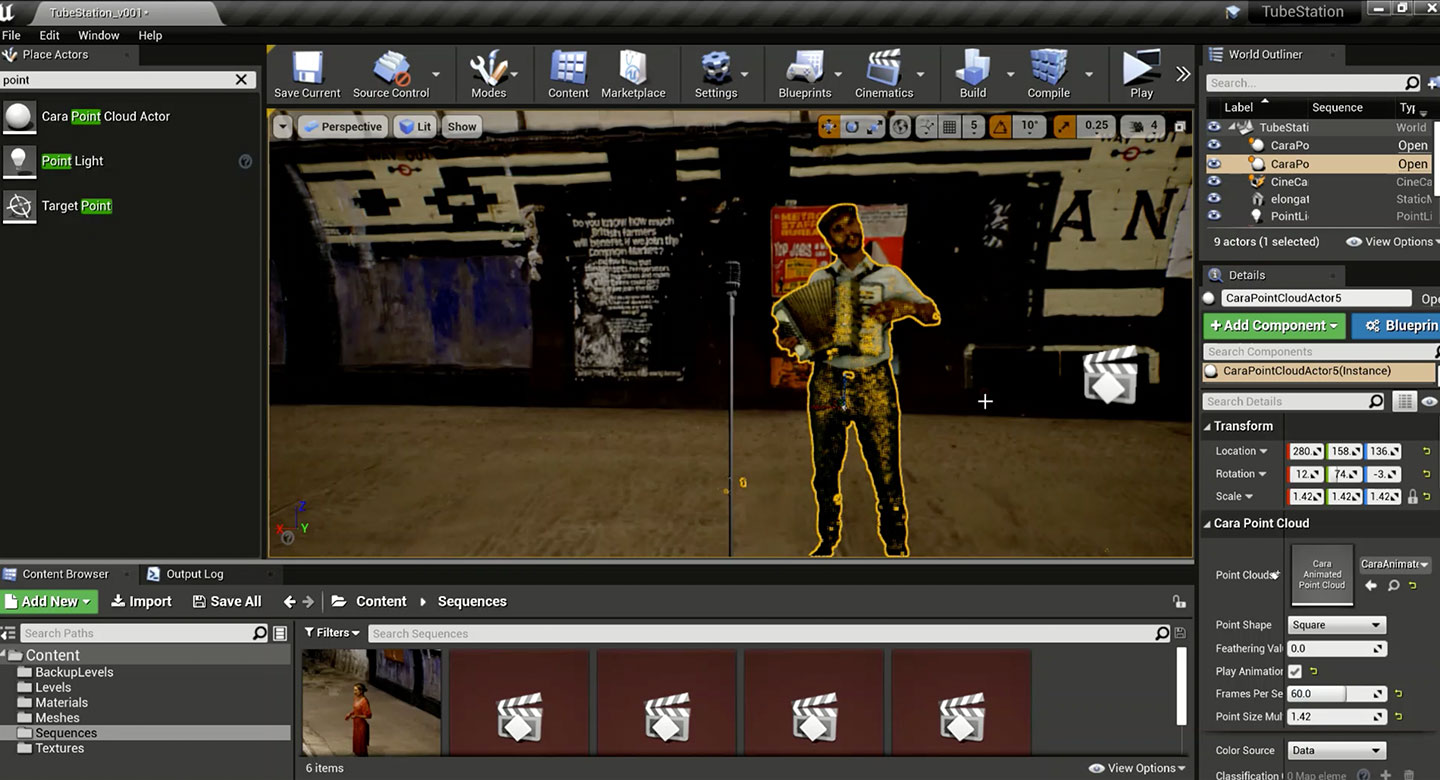

VoluMagic assets exported in Unreal Engine

“We also had to consider the various limitations of working with volumes and huge data structures. The issue arises when you start actually using volumetric video—you need a really high-spec computer with lots of memory, storage and so on. Large capture studios have capacity for this, but when people try to do this on their own machines, it doesn't work.”
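A rough back-of-the-envelope calculation shows why workstation hardware struggles. The sketch below estimates the raw size of a naive, uncompressed dense voxel grid. Every number in it (grid resolution, bytes per voxel, frame rate, clip length) is an illustrative assumption rather than a figure from the VoluMagic project, and production systems typically use meshes, sparse structures or compression that reduce these sizes considerably.

```python
def dense_volume_bytes(resolution: int, bytes_per_voxel: int) -> int:
    """Raw size of one uncompressed cubic voxel grid, in bytes."""
    return resolution ** 3 * bytes_per_voxel


# Illustrative assumptions only, not VoluMagic specifications.
resolution = 1024        # voxels along each edge of the cube
bytes_per_voxel = 4      # e.g. 8-bit RGBA per voxel
frames_per_second = 30
clip_seconds = 60

GIB = 1024 ** 3
TIB = 1024 ** 4

per_frame = dense_volume_bytes(resolution, bytes_per_voxel)
per_clip = per_frame * frames_per_second * clip_seconds

print(f"one frame:  {per_frame / GIB:.1f} GiB")
print(f"one minute: {per_clip / TIB:.1f} TiB")
```

Under these assumptions a single frame occupies 4 GiB and one minute of footage about 7 TiB before any compression, which is well beyond what a typical artist machine can hold in memory.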

The project took a turn at this point. Daniele explains: “Instead of focusing on editing volumetric video, we had to first make sure that the data extracted from the cameras was as sound as possible, before giving people a way of manipulating this data.”

Happily, this yielded positive results. “We ended up creating some very fundamental building blocks for assembling a volumetric system, which in turn allows us to develop these and progress to the next stage.”

Final results of Foundry's VoluMagic project, running in Unreal Engine

Looking ahead

But what exactly does this next stage look like?

Speaking of the future of volumetric capture and Foundry, Dan Ring, Head of Research, is excited about what’s in store.

“The goal is to pivot towards assisting existing, traditional production,” he explains. “In this case, we’re still talking about principal photography. There would still be a director, and a DP, but you’re using extra data to augment the principal camera, enabling greater creative freedom on set, and richer ways of working with and improving shots in post.”

VoluMagic assets displayed on Looking Glass 3D monitor

Foundry is hard at work on achieving this goal. Coming soon is a set of nodes for Nuke that ingest the images captured by multiple cameras and create a 3D representation of the scene. These nodes will make up a plug-in, CaraVOL, due to be released as an alpha version for content creators and compositors to experiment, test and play with.

Long-term, Foundry’s research team plan on taking the learnings from the VoluMagic project and combining the concepts of volumetric video and light fields, with a focus on assisting both production and post-production. Watch this space!