5 top trends set to shape 2022—and beyond

A slow and steady recovery from the COVID-19 crisis has been mission critical for 2021. As we head into a new year, it’s hard to predict what the future holds for a world still hampered by the coronavirus pandemic.

One thing remains comfortingly familiar—the rapid pace at which technology continues to evolve and adapt, giving rise to new ways of working, thinking and creating. Here, then, are the top five 2022 trends set to affect the media and entertainment industry, and what they mean for content creators everywhere.

The Metaverse

Mark Zuckerberg’s ringing endorsement of the metaverse, coupled with Facebook changing its name to Meta, meant that the term and all it entails came barrelling into the common zeitgeist earlier this year.

At 2021’s Facebook Connect Main Keynote, Zuckerberg described the metaverse as an “even more immersive and embodied internet” where “you're going to be able to do almost anything you can imagine (get together with friends and family, work, learn, play, shop, create) as well as entirely new categories that don't really fit how we think about computers or phones today.”

Zuckerberg mentioned the term ‘metaverse’ 80+ times in a 90-minute presentation, admitting that “the best way to understand the metaverse is to experience it yourself, but it's a little tough because it doesn't fully exist yet.”

What we do know is that the possibilities of the metaverse are endless. The common picture is of a shared universe, featuring IPs from multiple companies, in which avatars interact and users can own, create and exchange virtual property as they would physical property. The final result may be full 3D telepresence via VR or AR glasses, Ready Player One-style.

Several technologies are helping the metaverse, sometimes also referred to as ‘Web 3.0’, gain steam. Advances in virtual and augmented reality, blockchain, cryptocurrencies and 5G networks together promise a metaverse that is immersive and decentralized. Later down the line, human augmentation in the form of brain-computer interfaces may further blur the lines between physical and virtual worlds.

What does all this mean for visual effects, animation and beyond?

Certainly, it means new ways of creating content to support new means of viewing by a much wider audience. Anticipating this, Unity’s acquisition of VFX giant Weta Digital’s tools and technology comes as no coincidence: the deal is meant to unlock the full potential of the Unity engine for future content creators expanding into the metaverse.

The merging of different tech and tools in cases like this means further pipeline disruption can be expected, as the lines between stages of production blur.

Yet there are some caveats that come with the rise of the metaverse. It raises the question of whether this next-generation internet will mirror today’s, with user data monetised by proprietary platforms such as Facebook and Google, or whether it will reject the control of big tech and be more open and decentralized. Should the latter happen, content creators can anticipate a never-before-seen level of artistic freedom.

Machine Learning

As expected, advances in AI continue to move at pace.

The emergence of low-code machine learning, which lets developers around the world engage with the fundamentals of ML without the rigours of tricky coding, has made it more accessible than ever before and driven wider adoption. Low-code ML platforms for beginners include Microsoft Power Apps, Visual LANSA and OutSystems. Google AutoML is a completely no-code platform that trains high-quality custom machine learning models with minimal effort.

Meanwhile, Foundry’s own NukeX and Nuke Studio feature updated AI tools as part of Nuke’s recent 13.1 release to accelerate the artistic process—namely new support for third-party PyTorch models in the Inference node.

With PyTorch being one of the leading machine learning frameworks, it’s a key part of many ML pipelines. The new support allows artists to load custom and third-party models into Nuke which, in turn, opens Nuke up to a vast range of community-generated machine learning models.

Other key compositing tasks, such as keying and rotoscoping, can be mastered by machine learning. Just this year, Foundry’s research team wrapped project SmartROTO, which aimed to speed up the traditionally time-consuming rotoscoping process via artist-assisted machine learning. The idea was that artists would create a set of shapes and a small set of keyframes, and SmartROTO—specifically, the ML tech behind it—would speed up the process of setting intermediate keyframes across the sequences.
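To make the idea of intermediate keyframes concrete, here is a minimal Python sketch of the naive baseline: straight linear interpolation of a shape's control points between two artist-set keyframes. This is not SmartROTO's method, whose ML details are not described here. The function name `interpolate_shape`, the layout of the `keyframes` dictionary and the sample coordinates are all assumptions made purely for illustration.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Shape = List[Point]  # ordered control points of one roto shape


def interpolate_shape(keyframes: Dict[int, Shape], frame: int) -> Shape:
    """Linearly interpolate control points between the nearest keyframes.

    Hypothetical helper for illustration only; it is a plain linear
    baseline, not the ML approach used in SmartROTO.
    """
    if not keyframes:
        raise ValueError("at least one keyframe is required")

    frames = sorted(keyframes)

    # Outside the keyed range, hold the first or last keyframe.
    if frame <= frames[0]:
        return list(keyframes[frames[0]])
    if frame >= frames[-1]:
        return list(keyframes[frames[-1]])

    # Find the keyframes on either side of the requested frame.
    idx = bisect_right(frames, frame)
    f0, f1 = frames[idx - 1], frames[idx]
    start, end = keyframes[f0], keyframes[f1]
    if len(start) != len(end):
        raise ValueError("keyframes must have the same number of points")

    # Blend each control point by the fraction of the way from f0 to f1.
    t = (frame - f0) / (f1 - f0)
    return [
        (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        for (x0, y0), (x1, y1) in zip(start, end)
    ]


# Two artist-set keyframes for a two-point shape (made-up coordinates).
keyframes = {
    1: [(0.0, 0.0), (10.0, 0.0)],
    11: [(10.0, 20.0), (20.0, 20.0)],
}
midpoint_shape = interpolate_shape(keyframes, 6)
```

In-betweens produced this way follow a straight path, so they tend to drift off a subject that moves or deforms non-linearly, which is one reason rotoscoping usually needs many manual keyframes. An artist-assisted ML approach would aim to produce better intermediate shapes from the same sparse input.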

Whilst productization of SmartROTO is still a long way off, the story behind the project stands as part of Foundry’s ongoing efforts to make ML accessible to artists by taking complicated algorithms and making them available to solve hard technical problems in an artist-friendly way.

Elsewhere, key movers and shakers in the ML space include Digital Domain, whose proprietary facial capture system Masquerade 2.0 can deliver a photorealistic 3D character and dozens of hours of performance in a few months. Meanwhile, at Nvidia’s November 2021 conference, CEO Jensen Huang unveiled NeMo Megatron, reportedly the biggest high-performance computing application ever used to train large language models. NVIDIA Modulus was also announced, designed to build and train ML models that obey the laws of physics.

Man or machine, there’s certainly an exciting future ahead for automation and AI.

Animation

Between 2022 and 2026, the animation and visual effects (VFX) industries are expected to grow by 9%, with the global 3D animation market expected to grow at a significant pace thanks to increasing adoption by several key players in the industry. These include DNEG, Rodeo FX and Sydney-based Flying Bark, whose recent accolades include work on Marvel’s What If…?.

With the global 3D animation market predicted to reach $15.88 billion by 2025, we can expect to see much more of it on our screens and streams and, thanks to technological advances in the VR space, in a completely new reality.

Due to advancements in the technology essential to VR, new tools capable of producing professional, high-quality media in innovative ways are emerging. Tools like Quill for Oculus mean more animators are investing in VR to create animated content, eschewing the usual method of 3D modelling on a screen and instead diving into the thick of it in order to make things to scale.

Key animated VR shows to watch out for include Battlescar, for Oculus Quest and Rift; Baba Yaga, an interactive experience exclusive to the Oculus Quest; and Gloomy Eyes, a three-part love story between a zombie boy and a human girl in a town devoid of sunlight, inspired by Tim Burton’s The Nightmare Before Christmas.

Real-time Workflows

Game engines and virtual production continue to make waves in the visual effects and animation industries. The appeal lies in one critical question: what if you could translate your ideas into images, as fast as you have them?

For filmmakers interested in real-time technology and virtual production, this is the holy grail: the ability to work in real time, iterate in photorealistic environments, and do anything you want in the moment. Tools that can match the speed of thought would allow almost limitless experimentation and unfettered creativity.

LED volumes, game engines and in-camera VFX: each can be deftly used by filmmakers and directors to create compelling content, imagine worlds and iterate on shots and sequences. Aaron Sims Creative, which has long relied on traditional methods to deliver content, is just one studio that has pivoted to real-time techniques in the making of Dive, a new series of films with game engines at their core.

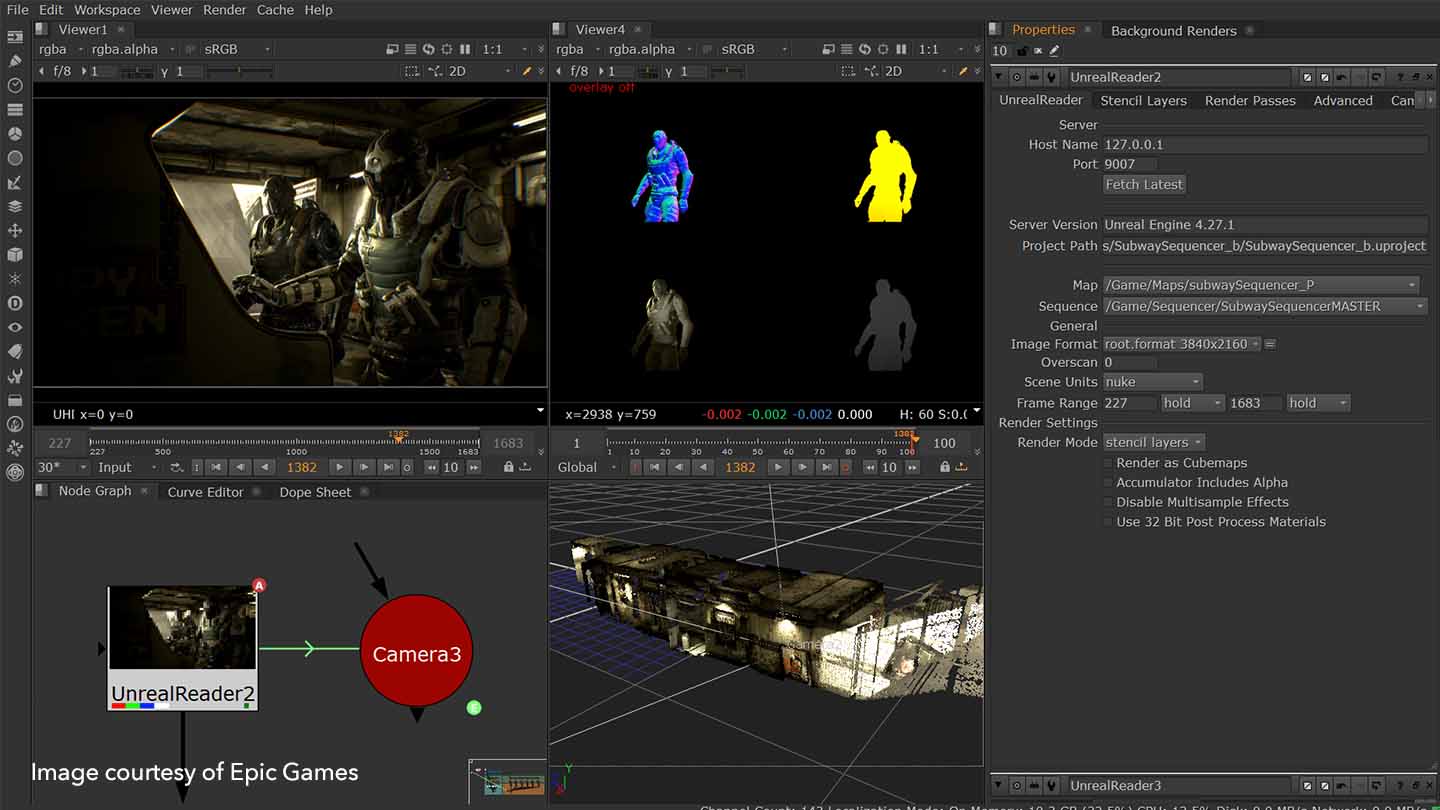

The increasing use of game engines and real-time workflows was one of the drivers behind the development of Nuke’s UnrealReader node, released in 13.1. It comes as a boon to artists using Unreal Engine and Nuke together, empowering them to be more creative in their compositing by removing pipeline friction and bringing the power of Nuke into real-time projects.

Artists can easily request image data and utility passes from Unreal Engine directly within NukeX, enabling them to see all the latest Unreal changes in their Nuke script while they refine the image using Nuke’s powerful compositing toolset.

Real-time technology continues to dominate discussions around content creation and how it combines with traditional post-production methods. At December’s 2021 GTC conference, key Foundry figures are excited to host The Reality of Real-time, in which they’ll present an on-set workflow that delivers advanced post-production in near real time, allowing immediate review by the filmmakers and letting post-production begin the moment the director says ‘cut’. It is a workflow that looks more and more likely as we move into 2022.

The Push Towards Open Standards

Open source technology continues to be a hot topic as we move into 2022. It’s no wonder why: the potential it holds for fostering more collaborative relationships and workflows across artists, teams and studios is undeniable. Open standards are arguably more important than ever in a world disrupted by COVID-19, wherein remote work is now the de facto standard for many businesses.

Once a technology is open source, it’s open to everyone. Organizations are given the opportunity to write and support their own bespoke software, before freely sharing this with other organizations, without having to pay for a third-party license.

These benefits are immediately tangible, and they are driving the move towards open standards. Open source technology is capable of connecting multiple areas of the pipeline and different systems together, allowing data to flow efficiently throughout, from standard file formats to integration between applications and beyond.

Projects such as OpenColorIO, OpenEXR, and OpenVDB are instrumental in making it easier for studios to collaborate with each other on major VFX films. Meanwhile, Universal Scene Description (USD) continues to change the face of VFX as an open standard proving pivotal in encouraging smart, collaborative and parallel department workflows, which are themselves crucial to realising creative intent quickly with real-time feedback.

Indeed, USD looks more important than ever as the prospect of a metaverse becomes more and more tangible. Described as the ‘HTML of 3D’ by Richard Kerris, vice president of Nvidia’s Omniverse platform, during a press briefing ahead of its 2021 GPU Technology Conference, USD enables sharing of 3D assets to render the virtual worlds synonymous with the metaverse. Virtual sets, animations, materials, and other 3D assets can be seamlessly exchanged across applications. USD also supports collaborative scene building, which makes it well suited to constructing virtual environments, worlds and realities.

World, watch out—The Matrix may be closer than we think, supported by standards that are open, customizable and collaborative.
